We are just testing and playing around here; none of this is fit for PROD.

I want to run a few small tests with wrk and JMeter and see what we find.

Installing wrk

Installing wrk (an open source benchmarking tool written in C) on Linux is easy:

sudo apt install wrk

It can also be installed under WSL on Windows.

Basic usage, for example:

wrk -t4 -c200 -d30s http://localhost:8080/api/hello

| Flag/Parameter | Meaning | Example |
|---|---|---|
| -t | Number of load-generating threads started by wrk | 4 threads |
| -c | Total number of concurrent connections (simultaneous users) | 200 connections |
| -d | Duration of the test | 30 seconds |

- Repository: wrk

Environment

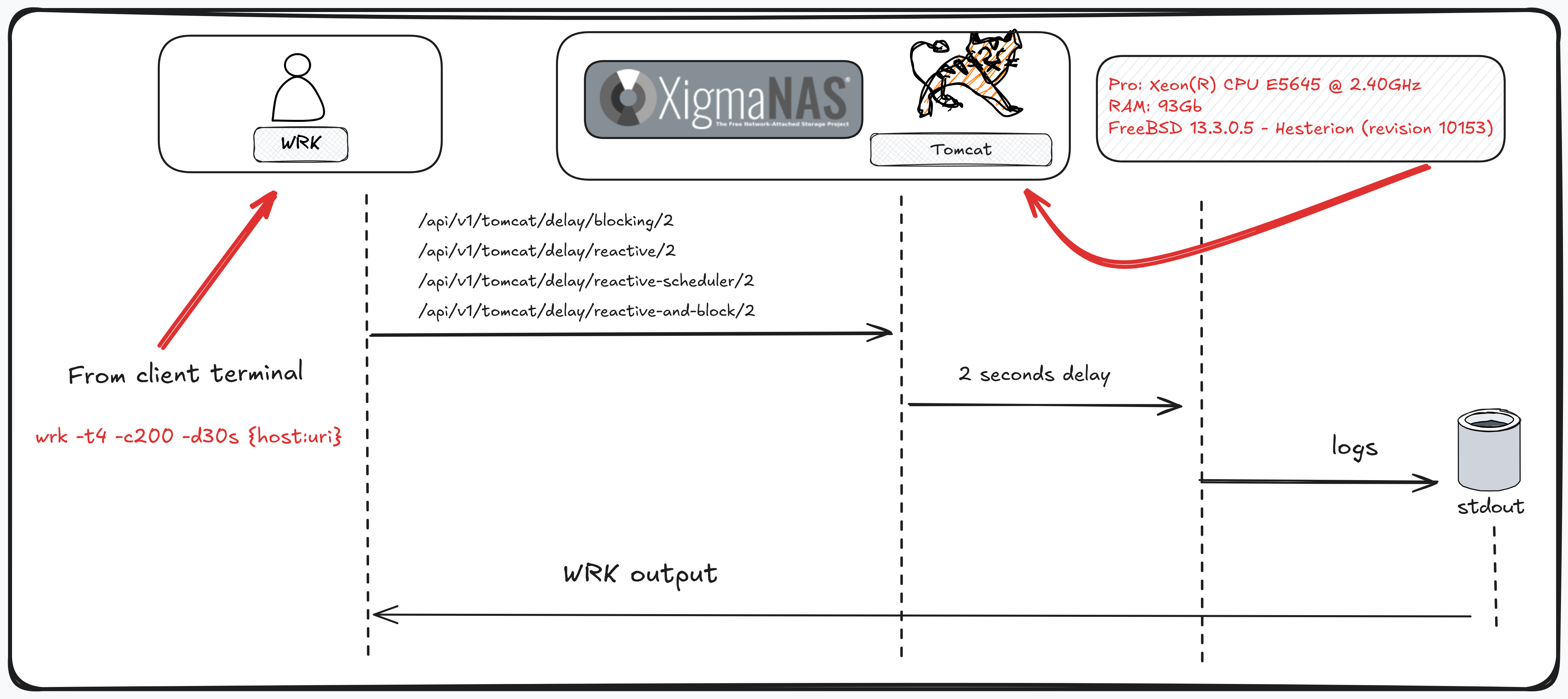

I plan to test a stack with Spring MVC and Spring WebFlux, on my NAS and on Oracle Cloud Infrastructure (OCI), so I will build a simple standalone application (.jar) with a single controller.

To do that, the pom.xml has to be adjusted so the microservice can start on either Tomcat or Netty (more precisely Reactor Netty, which is Netty with backpressure support).

The block() operator

As we surely know, Project Reactor's block() operator turns the reactive stream into something plainly synchronous (which defeats the purpose it was designed for), so I will also create an endpoint that uses it to observe its behavior.

import java.time.Duration;

import lombok.extern.log4j.Log4j2;
import org.springframework.http.ResponseEntity;
import org.springframework.util.ClassUtils;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.PathVariable;
import org.springframework.web.bind.annotation.RestController;
import reactor.core.publisher.Mono;
import reactor.core.scheduler.Schedulers;

@Log4j2
@RestController
public class MyController {

    // Blocking endpoint (uses Thread.sleep) (1)
    @GetMapping("/delay/blocking/{seconds}")
    public ResponseEntity<String> delayBlocking(@PathVariable int seconds) {
        try {
            final long millis = seconds * 1000L;
            Thread.sleep(millis);
            log.info("blocking seconds: {}*1000L: {}", seconds, millis);
        } catch (InterruptedException e) {
            // Restore the interrupt flag so the container can see the interruption
            Thread.currentThread().interrupt();
        }
        return ResponseEntity.ok("OK (blocking) after " + seconds + "s on " + serverType());
    }

    // Reactive (non-blocking) endpoint (2)
    // Mapping restored from the URLs used in the wrk runs
    @GetMapping("/delay/reactive/{seconds}")
    public Mono<String> delayReactive(@PathVariable int seconds) {
        return Mono.delay(Duration.ofSeconds(seconds))
                .map(i -> "OK (reactive) after " + seconds + "s on " + serverType())
                .log();
    }

    // Reactive endpoint with the block() operator (3)
    @GetMapping("/delay/reactive-and-block/{seconds}")
    public String delayReactiveWithBlock(@PathVariable int seconds) {
        return Mono.delay(Duration.ofSeconds(seconds))
                .map(i -> "OK (reactive with block()) after " + seconds + "s on " + serverType())
                .log()
                .block();
    }

    // Reactive endpoint with boundedElastic (4)
    @GetMapping("/delay/reactive-scheduler/{seconds}")
    public Mono<String> delayReactiveWithCustomScheduler(@PathVariable int seconds) {
        return Mono.fromCallable(() -> "OK (reactive with boundedElastic()) after " + seconds + "s on " + serverType())
                .subscribeOn(Schedulers.boundedElastic())
                .delaySubscription(Duration.ofSeconds(seconds))
                .log();
    }

    // Reports the server in use, based on whether Tomcat is on the classpath
    private String serverType() {
        return ClassUtils.isPresent("org.apache.catalina.startup.Tomcat", null)
                ? "Tomcat"
                : "Reactor Netty";
    }
}

| 1 | Blocking endpoint (uses Thread.sleep) |
| 2 | Reactive (non-blocking) endpoint |
| 3 | Reactive endpoint that nevertheless calls the block() operator |
| 4 | Reactive (non-blocking) endpoint using the boundedElastic Scheduler [1]wrap-blocking. |
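To make the effect of block() in endpoint (3) a bit more concrete, here is a minimal sketch that uses only the JDK. It swaps in CompletableFuture as a stand-in for Mono (so this is not Reactor code), and the class and method names are hypothetical. The point is just that the delayed value is produced without holding the caller's thread, until the caller decides to wait for it.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;

final class BlockingWaitSketch {

    /** Completes with a message after delayMs without occupying the caller's thread. */
    static CompletableFuture<String> delayed(long delayMs) {
        return CompletableFuture.supplyAsync(
                () -> "OK after " + delayMs + "ms",
                CompletableFuture.delayedExecutor(delayMs, TimeUnit.MILLISECONDS));
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        CompletableFuture<String> pending = delayed(200);
        // The call returns right away; the value is not there yet.
        System.out.println("returned immediately, done=" + pending.isDone());

        // join() plays the role of block() here: the calling thread now waits.
        String value = pending.join();
        long waitedMs = (System.nanoTime() - start) / 1_000_000;
        System.out.println(value + " (caller waited about " + waitedMs + " ms)");
    }
}
```

On a thread-per-request server that wait costs one worker thread per request, which is tolerable; on an event loop it would stall every connection served by that thread.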

And in the pom.xml it is simply:

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-webflux</artifactId>
</dependency>
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-web</artifactId>
</dependency>

block() in Netty

Netty hates this operator, because the whole idea is to avoid adding contention on the event loop [2]webflux-concurrency-model.

// Reactive endpoint with the block() operator
@GetMapping("/delay/reactive-and-block/{seconds}")
public String delayReactiveWithBlock(@PathVariable int seconds) {
    return Mono.delay(Duration.ofSeconds(seconds))
            .map(i -> "OK (reactive with block()) after " + seconds + "s on " + serverType())
            .log()
            .block(); // (1)
}

| 1 | A bare block() 😂 |

2025-10-22T15:44:15.527+02:00 ERROR 34105 --- [or-http-epoll-5] a.w.r.e.AbstractErrorWebExceptionHandler : [36cf9722-2] 500 Server Error for HTTP GET "/delay/reactive-and-block/2"

java.lang.IllegalStateException: block()/blockFirst()/blockLast() are blocking, which is not supported in thread reactor-http-epoll-5

at reactor.core.publisher.BlockingSingleSubscriber.blockingGet(BlockingSingleSubscriber.java:87) ~[reactor-core-3.7.11.jar:3.7.11]

Suppressed: reactor.core.publisher.FluxOnAssembly$OnAssemblyException:

Error has been observed at the following site(s):

*__checkpoint ⇢ HTTP GET "/delay/reactive-and-block/2" [ExceptionHandlingWebHandler]

Original Stack Trace:

Changing the Spring Boot port at runtime

mvn spring-boot:run -Dspring-boot.run.arguments=--server.port=8000

When running the .jar directly, we use:

java -jar tomcat-performance.jar --server.port=8081 # (1)

| 1 | 8081 is the port; change it to whichever one you want. |

Starting the tests

Tomcat server

A simple curl call looks like this:

curl --location 'http://192.168.1.219:8081/api/v1/tomcat/delay/blocking/2'

These are the URLs we will invoke with wrk:

wrk -t4 -c200 -d30s http://192.168.1.219:8081/api/v1/tomcat/delay/blocking/2

wrk -t4 -c200 -d30s http://192.168.1.219:8081/api/v1/tomcat/delay/reactive/2

wrk -t4 -c200 -d30s http://192.168.1.219:8081/api/v1/tomcat/delay/reactive-scheduler/2

wrk -t4 -c200 -d30s http://192.168.1.219:8081/api/v1/tomcat/delay/reactive-and-block/2

Tests over a UTP cable straight to my NAS switch

Yes, my switch is configured with jumbo frames and LAGG.

- /api/v1/tomcat/delay/blocking/2

rubn ⲁƛ ▸ wrk -t4 -c200 -d30s http://192.168.1.219:8081/api/v1/tomcat/delay/blocking/2

Running 30s test @ http://192.168.1.219:8081/api/v1/tomcat/delay/blocking/2

4 threads and 200 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 0.00us 0.00us 0.00us -nan%

Req/Sec 64.96 106.82 490.00 86.11%

2800 requests in 30.07s, 399.22KB read

Socket errors: connect 0, read 0, write 0, timeout 2800

Requests/sec: 93.11

Transfer/sec: 13.28KB

- /api/v1/tomcat/delay/reactive/2

rubn ⲁƛ ▸ wrk -t4 -c200 -d30s http://192.168.1.219:8081/api/v1/tomcat/delay/reactive/2

Running 30s test @ http://192.168.1.219:8081/api/v1/tomcat/delay/reactive/2

4 threads and 200 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 0.00us 0.00us 0.00us -nan%

Req/Sec 58.20 45.26 161.00 81.48%

2800 requests in 30.07s, 399.22KB read

Socket errors: connect 0, read 0, write 0, timeout 2800

Requests/sec: 93.11

Transfer/sec: 13.28KB

- /api/v1/tomcat/delay/reactive-and-block/2

rubn ⲁƛ ▸ wrk -t4 -c200 -d30s http://192.168.1.219:8081/api/v1/tomcat/delay/reactive-and-block/2

Running 30s test @ http://192.168.1.219:8081/api/v1/tomcat/delay/reactive-and-block/2

4 threads and 200 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 0.00us 0.00us 0.00us -nan%

Req/Sec 31.68 61.87 490.00 98.25%

2800 requests in 30.06s, 434.77KB read

Socket errors: connect 0, read 0, write 0, timeout 2800

Requests/sec: 93.14

Transfer/sec: 14.46KB

- /api/v1/tomcat/delay/reactive-scheduler/2

rubn ⲁƛ ▸ wrk -t4 -c200 -d30s http://192.168.1.219:8081/api/v1/tomcat/delay/reactive-scheduler/2

Running 30s test @ http://192.168.1.219:8081/api/v1/tomcat/delay/reactive-scheduler/2

4 threads and 200 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 0.00us 0.00us 0.00us -nan%

Req/Sec 104.08 92.54 280.00 45.83%

2800 requests in 30.06s, 459.38KB read

Socket errors: connect 0, read 0, write 0, timeout 2800

Requests/sec: 93.16 (1)

Transfer/sec: 15.28KB

| 1 | 93.16 requests per second. |
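A likely reading of the "Latency 0.00us" and "timeout 2800" lines in these runs: every response arrives slightly after the 2-second delay, and wrk, as far as I know, uses a default request timeout of 2 seconds (adjustable with --timeout) and counts any response slower than that as a timeout instead of adding it to the latency statistics. The Java sketch below only models that bookkeeping under this assumption; the class name, the method names and the 2005 ms sample latency are hypothetical and are not taken from wrk or from these runs.

```java
final class TimeoutBookkeeping {
    private final long timeoutMs;
    private long recorded;      // responses that made it into the latency stats
    private long timeouts;      // responses slower than the timeout
    private long latencySumMs;

    TimeoutBookkeeping(long timeoutMs) {
        this.timeoutMs = timeoutMs;
    }

    /** Registers one completed response; slow ones only bump the timeout counter. */
    void onResponse(long latencyMs) {
        if (latencyMs > timeoutMs) {
            timeouts++;
        } else {
            recorded++;
            latencySumMs += latencyMs;
        }
    }

    long timeouts() { return timeouts; }

    long recorded() { return recorded; }

    /** Average latency over recorded responses, 0 when nothing was recorded. */
    double averageLatencyMs() {
        return recorded == 0 ? 0.0 : (double) latencySumMs / recorded;
    }

    public static void main(String[] args) {
        TimeoutBookkeeping stats = new TimeoutBookkeeping(2000); // assumed 2 s default timeout
        for (int i = 0; i < 2800; i++) {
            stats.onResponse(2005); // hypothetical: 2 s delay plus a few ms of overhead
        }
        // prints "timeouts=2800 avgLatencyMs=0.0"
        System.out.println("timeouts=" + stats.timeouts() + " avgLatencyMs=" + stats.averageLatencyMs());
    }
}
```

If this reading is right, rerunning the same commands with a larger timeout (for example --timeout 5s) should produce real latency figures instead of zeros.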

Tests without a cable, over Wi-Fi to the NAS

I have a dual-band router (2.4 GHz and 5 GHz), ugh, what a pain, hahaha, but I will use the 2.4 GHz band, which is supposed to be the slower one.

A bit strange: practically the same number of requests.
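The similarity looks less strange once the 2-second delay is taken into account. In a closed-loop test like this one, each of the 200 connections can only finish one request every 2 seconds, so the delay caps the throughput long before the network does. The sketch below is just that arithmetic; the class and method names are hypothetical, and the figure of 14 completed rounds per connection is my inference from the 2800 total (200 × 14), not something wrk reports.

```java
final class ClosedLoopEstimate {

    /** Upper bound on requests/sec when every connection waits delaySeconds per request. */
    static double maxRequestsPerSecond(int connections, double delaySeconds) {
        return connections / delaySeconds;
    }

    /** Requests completed if each connection finishes the given number of full rounds. */
    static long completedRequests(int connections, int fullRounds) {
        return (long) connections * fullRounds;
    }

    public static void main(String[] args) {
        // 200 connections, 2 s per request: at most 100 requests/sec
        System.out.println(maxRequestsPerSecond(200, 2.0)); // prints 100.0

        // 14 full rounds fit in the 30 s window (inferred, see lead-in)
        System.out.println(completedRequests(200, 14));      // prints 2800
    }
}
```

The measured 93 requests/sec sits just under that 100 requests/sec ceiling on both cable and Wi-Fi, which is what one would expect when the server-side delay dominates.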

- /api/v1/tomcat/delay/blocking/2

rubn ⲁƛ ▸ wrk -t4 -c200 -d30s http://192.168.1.219:8081/api/v1/tomcat/delay/blocking/2

Running 30s test @ http://192.168.1.219:8081/api/v1/tomcat/delay/blocking/2

4 threads and 200 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 0.00us 0.00us 0.00us -nan%

Req/Sec 52.73 90.38 470.00 89.39%

2800 requests in 30.08s, 399.22KB read

Socket errors: connect 0, read 0, write 0, timeout 2800

Requests/sec: 93.09

Transfer/sec: 13.27KB

- /api/v1/tomcat/delay/reactive/2

rubn ⲁƛ ▸ wrk -t4 -c200 -d30s http://192.168.1.219:8081/api/v1/tomcat/delay/reactive/2

Running 30s test @ http://192.168.1.219:8081/api/v1/tomcat/delay/reactive/2

4 threads and 200 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 0.00us 0.00us 0.00us -nan%

Req/Sec 58.08 106.44 474.00 89.23%

2800 requests in 30.08s, 399.22KB read

Socket errors: connect 0, read 0, write 0, timeout 2800

Requests/sec: 93.10

Transfer/sec: 13.27KB

- /api/v1/tomcat/delay/reactive-and-block/2

rubn ⲁƛ ▸ wrk -t4 -c200 -d30s http://192.168.1.219:8081/api/v1/tomcat/delay/reactive-and-block/2

Running 30s test @ http://192.168.1.219:8081/api/v1/tomcat/delay/reactive-and-block/2

4 threads and 200 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 0.00us 0.00us 0.00us -nan%

Req/Sec 50.03 110.56 474.00 93.55%

2800 requests in 30.08s, 434.77KB read

Socket errors: connect 0, read 0, write 0, timeout 2800

Requests/sec: 93.08

Transfer/sec: 14.45KB

- /api/v1/tomcat/delay/reactive-scheduler/2

rubn ⲁƛ ▸ wrk -t4 -c200 -d30s http://192.168.1.219:8081/api/v1/tomcat/delay/reactive-scheduler/2

Running 30s test @ http://192.168.1.219:8081/api/v1/tomcat/delay/reactive-scheduler/2

4 threads and 200 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 335.54ms 201.74ms 931.05ms 63.30%

Req/Sec 158.49 97.59 575.00 65.48%

17986 requests in 30.06s, 3.10MB read

Requests/sec: 598.39

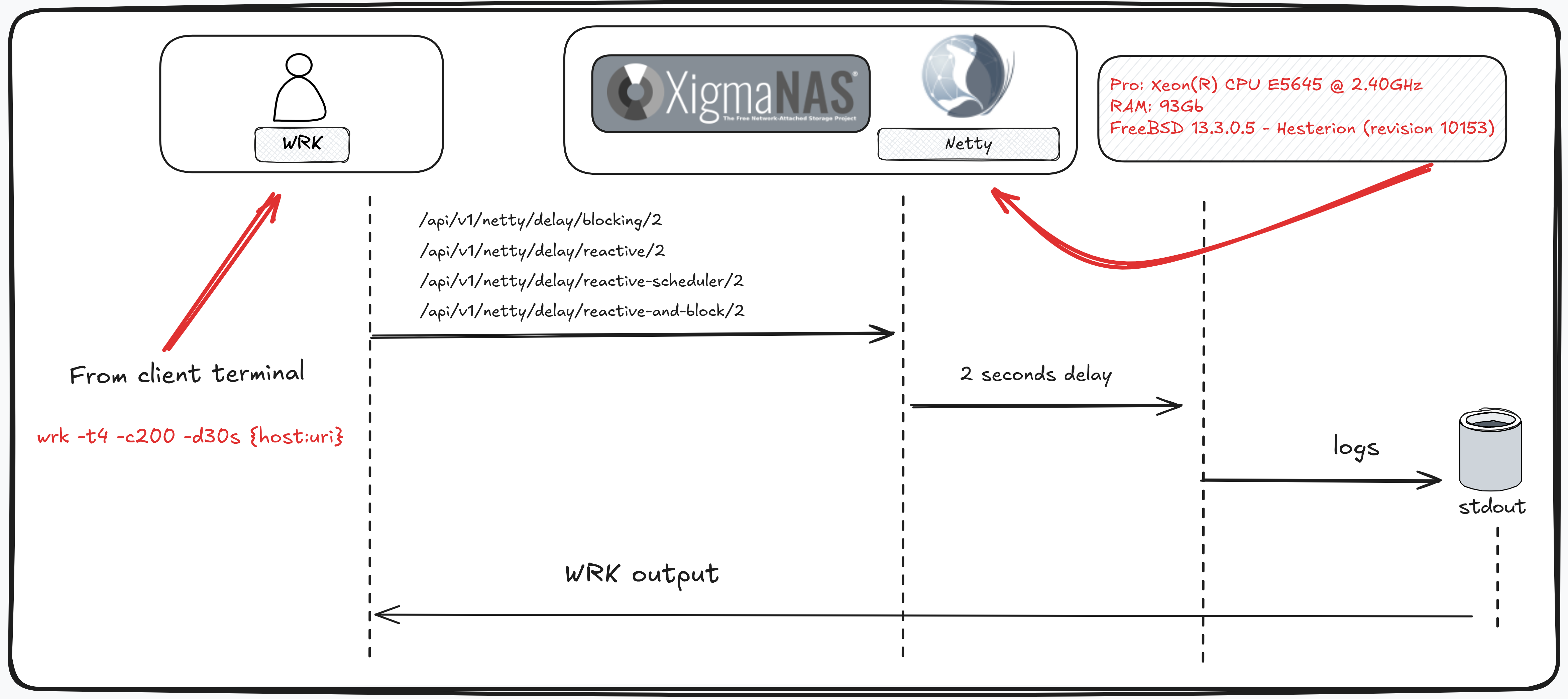

Transfer/sec: 105.77KBServer reactor Netty

wrk -t4 -c200 -d30s http://192.168.1.219:8082/api/v1/netty/delay/blocking/2

wrk -t4 -c200 -d30s http://192.168.1.219:8082/api/v1/netty/delay/reactive/2

wrk -t4 -c200 -d30s http://192.168.1.219:8082/api/v1/netty/delay/reactive-scheduler/2

wrk -t4 -c200 -d30s http://192.168.1.219:8082/api/v1/netty/delay/reactive-and-block/2Pruebas con cable UTP directo a mi switch NAS

-

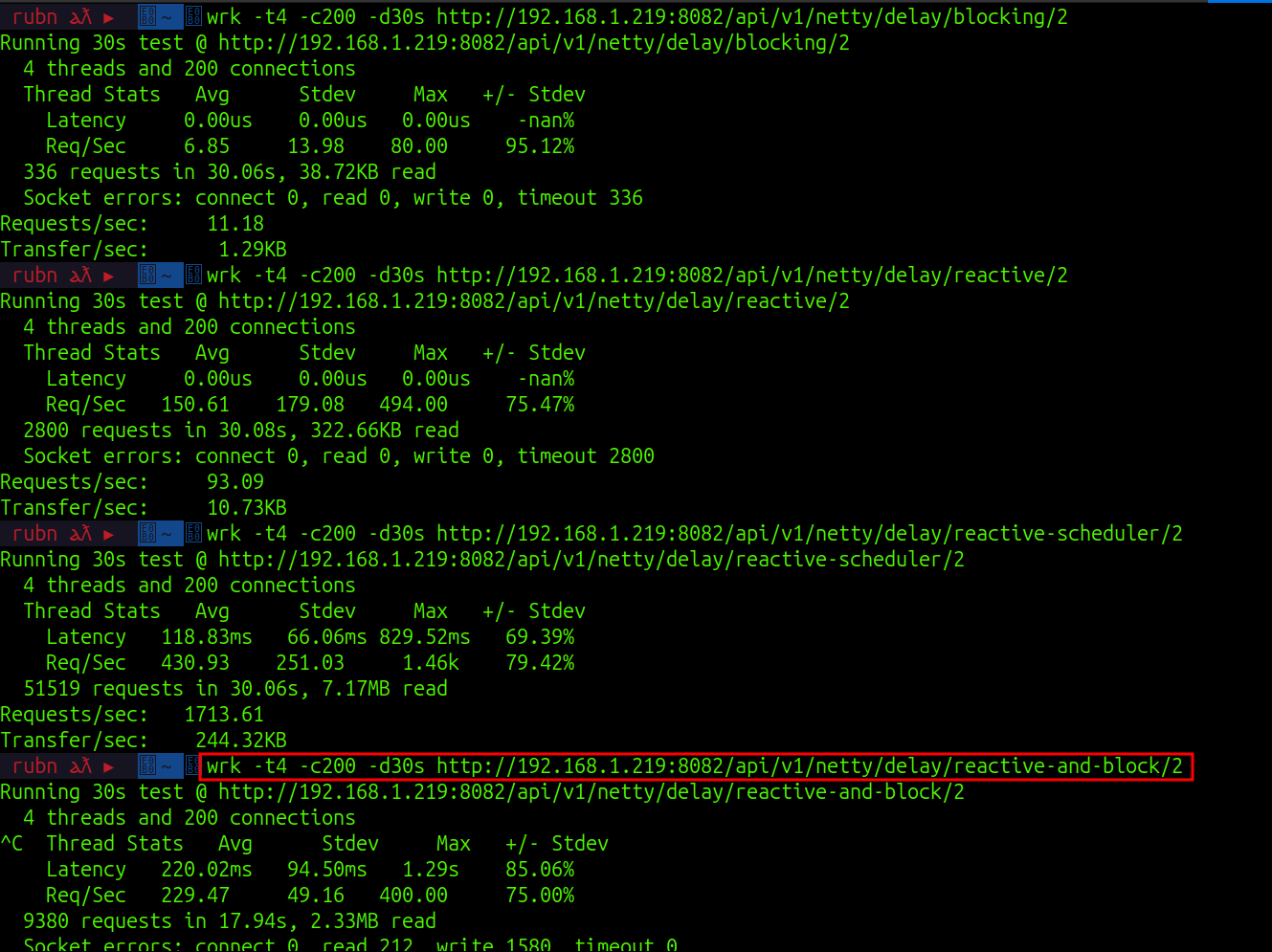

/api/v1/netty/delay/blocking/2

rubn ⲁƛ ▸ wrk -t4 -c200 -d30s http://192.168.1.219:8082/api/v1/netty/delay/blocking/2

Running 30s test @ http://192.168.1.219:8082/api/v1/netty/delay/blocking/2

4 threads and 200 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 0.00us 0.00us 0.00us -nan%

Req/Sec 6.85 13.98 80.00 95.12%

336 requests in 30.06s, 38.72KB read

Socket errors: connect 0, read 0, write 0, timeout 336

Requests/sec: 11.18

Transfer/sec: 1.29KB

- /api/v1/netty/delay/reactive/2

rubn ⲁƛ ▸ wrk -t4 -c200 -d30s http://192.168.1.219:8082/api/v1/netty/delay/reactive/2

Running 30s test @ http://192.168.1.219:8082/api/v1/netty/delay/reactive/2

4 threads and 200 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 0.00us 0.00us 0.00us -nan%

Req/Sec 150.61 179.08 494.00 75.47%

2800 requests in 30.08s, 322.66KB read

Socket errors: connect 0, read 0, write 0, timeout 2800

Requests/sec: 93.09

Transfer/sec: 10.73KB

- /api/v1/netty/delay/reactive-scheduler/2

rubn ⲁƛ ▸ wrk -t4 -c200 -d30s http://192.168.1.219:8082/api/v1/netty/delay/reactive-scheduler/2

Running 30s test @ http://192.168.1.219:8082/api/v1/netty/delay/reactive-scheduler/2

4 threads and 200 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 0.00us 0.00us 0.00us -nan%

Req/Sec 88.21 88.34 330.00 79.17%

2800 requests in 30.06s, 382.81KB read

Socket errors: connect 0, read 0, write 0, timeout 2800

Requests/sec: 93.15 (1)

Transfer/sec: 12.74KB

| 1 | 93.15 requests per second |
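The gap between the blocking endpoint (about 11 requests/sec) and the reactive one (about 93 requests/sec) on Netty fits the usual explanation: Thread.sleep holds an event-loop thread for the whole delay, while Mono.delay only sets a timer. The JDK-only sketch below imitates the two behaviors with a small fixed pool and a scheduler. It is a simplified model, not Reactor Netty itself, and the class name, method names and thread counts are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

final class BlockingVsScheduledDelay {

    /** Each task sleeps on one of `threads` workers; returns elapsed milliseconds. */
    static long runBlocking(int threads, int tasks, long delayMs) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        long start = System.nanoTime();
        try {
            Runnable sleeper = () -> sleepQuietly(delayMs);
            List<Future<?>> pending = new ArrayList<>();
            for (int i = 0; i < tasks; i++) {
                pending.add(pool.submit(sleeper));
            }
            for (Future<?> f : pending) {
                f.get(); // wait until every "request" has finished
            }
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
        return elapsedMs(start);
    }

    /** Each task is a timer on a single thread; no thread is held during the delay. */
    static long runScheduled(int tasks, long delayMs) {
        ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();
        long start = System.nanoTime();
        try {
            CountDownLatch done = new CountDownLatch(tasks);
            Runnable complete = done::countDown;
            for (int i = 0; i < tasks; i++) {
                timer.schedule(complete, delayMs, TimeUnit.MILLISECONDS);
            }
            done.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            timer.shutdown();
        }
        return elapsedMs(start);
    }

    private static void sleepQuietly(long delayMs) {
        try {
            Thread.sleep(delayMs);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    private static long elapsedMs(long startNanos) {
        return (System.nanoTime() - startNanos) / 1_000_000;
    }

    public static void main(String[] args) {
        // 16 "requests" of 100 ms each: 4 sleeping workers need about 4 rounds,
        // while the single timer thread finishes all of them in about one delay.
        System.out.println("blocking pool: " + runBlocking(4, 16, 100) + " ms");
        System.out.println("scheduled:     " + runScheduled(16, 100) + " ms");
    }
}
```

By the same logic, 336 requests would be consistent with, for example, 24 event-loop threads each completing 14 two-second requests, but that thread count is my guess and is not something measured here.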

And we cannot invoke the reactive-and-block/2 endpoint here, because it blocks:

// Reactive endpoint with the block() operator
@GetMapping("/delay/reactive-and-block/{seconds}")
public String delayReactiveWithBlock(@PathVariable int seconds) {
    return Mono.delay(Duration.ofSeconds(seconds))
            .map(i -> "OK (reactive with block()) after " + seconds + "s on " + serverType())
            .log()
            .block();
}

It is rather strange that we cannot do it, given that a Thread.sleep is allowed.

So we did not manage to invoke this one.
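One way to make sense of the difference: Thread.sleep is a plain JDK call that nothing inspects, whereas Reactor's block() first checks whether the current thread is marked as non-blocking and refuses to run if it is, which is where the IllegalStateException in the log comes from. The sketch below is a simplified JDK-only model of that idea, not Reactor's actual code; the marker interface, the thread class and the method names are all hypothetical stand-ins.

```java
final class NonBlockingGuard {

    /** Marker for threads that must never block (stand-in for Reactor's marker). */
    interface NonBlocking {}

    /** An event-loop style thread carrying the marker. */
    static final class EventLoopThread extends Thread implements NonBlocking {
        EventLoopThread(Runnable task) {
            super(task, "event-loop-1");
        }
    }

    /** Simplified stand-in for block(): refuses to run on a NonBlocking thread. */
    static String guardedBlock(String value) {
        if (Thread.currentThread() instanceof NonBlocking) {
            throw new IllegalStateException(
                    "blocking is not supported in thread " + Thread.currentThread().getName());
        }
        return value;
    }

    /** Runs a sleep and then guardedBlock on an event-loop thread; reports the outcome. */
    static String callOnEventLoop() {
        String[] outcome = new String[1];
        Thread loop = new EventLoopThread(() -> {
            try {
                Thread.sleep(10); // plain sleep: nothing checks the thread type
                outcome[0] = guardedBlock("OK");
            } catch (IllegalStateException e) {
                outcome[0] = "rejected: " + e.getMessage();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                outcome[0] = "interrupted";
            }
        });
        loop.start();
        try {
            loop.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return outcome[0];
    }

    public static void main(String[] args) {
        System.out.println(guardedBlock("OK")); // prints "OK" (main is not an event-loop thread)
        System.out.println(callOnEventLoop());   // prints "rejected: blocking is not supported in thread event-loop-1"
    }
}
```

So the sleep is not "allowed" in any meaningful sense; it simply goes unnoticed, and it still stalls the event loop, as the 11 requests/sec of the blocking endpoint suggest.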

Tests without a cable, over Wi-Fi to the NAS

- /api/v1/netty/delay/blocking/2

rubn ⲁƛ ▸ wrk -t4 -c200 -d30s http://192.168.1.219:8082/api/v1/netty/delay/blocking/2

Running 30s test @ http://192.168.1.219:8082/api/v1/netty/delay/blocking/2

4 threads and 200 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 0.00us 0.00us 0.00us -nan%

Req/Sec 5.00 11.36 70.00 94.55%

336 requests in 30.08s, 38.72KB read

Socket errors: connect 0, read 0, write 0, timeout 336

Requests/sec: 11.17

Transfer/sec: 1.29KB

- /api/v1/netty/delay/reactive/2

rubn ⲁƛ ▸ wrk -t4 -c200 -d30s http://192.168.1.219:8082/api/v1/netty/delay/reactive/2

Running 30s test @ http://192.168.1.219:8082/api/v1/netty/delay/reactive/2

4 threads and 200 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 0.00us 0.00us 0.00us -nan%

Req/Sec 42.38 83.30 494.00 95.38%

2800 requests in 30.10s, 322.66KB read

Socket errors: connect 0, read 0, write 0, timeout 2800

Requests/sec: 93.04

Transfer/sec: 10.72KB

- /api/v1/netty/delay/reactive-scheduler/2

rubn ⲁƛ ▸ wrk -t4 -c200 -d30s http://192.168.1.219:8082/api/v1/netty/delay/reactive-scheduler/2

Running 30s test @ http://192.168.1.219:8082/api/v1/netty/delay/reactive-scheduler/2

4 threads and 200 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 119.66ms 60.33ms 740.92ms 70.44%

Req/Sec 424.60 213.12 1.35k 74.75%

50792 requests in 30.06s, 7.07MB read

Requests/sec: XXX (1)

Transfer/sec: 240.90KB

| 1 | At least it is something. |