A simple example that uses the Java Stream API to extract the words of a text and count how many times each one is repeated.

To do this we use a function that removes certain characters, in this case `.`, `,` and `!`, and we split the text on a single space character `" "`. Splitting this way can also produce empty Strings (`""`), which we must filter out.

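```java
private String normalize(final String word) {
    return word.toLowerCase().replaceAll("[\\.\\,\\!]", ""); // (1)
}
```

(1) The regular expression is `[\\.\\,\\!]`. The backslashes are doubled because a backslash must itself be escaped inside a Java string literal, and the character class matches every period, comma and exclamation mark. `toLowerCase` converts the word to lowercase and `replaceAll` then removes all the matches, so `Hi` and `hi!` end up as the same word. Inside a character class these three characters are already literal, so `[.,!]` would behave the same; the escapes are harmless.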
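To see which characters `normalize` actually strips, here is a minimal standalone sketch. The class name `NormalizeDemo` and the sample words are hypothetical, and the method is made `static` only so it runs without the service class. Characters outside the class, such as `?`, are kept.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical standalone copy of the article's normalize logic
final class NormalizeDemo {

    // Same character class as the article: period, comma and exclamation mark
    private static final String REG_EXP = "[\\.\\,\\!]";

    static String normalize(final String word) {
        // Lowercase first, then remove every match of the character class
        return word.toLowerCase().replaceAll(REG_EXP, "");
    }

    public static void main(String[] args) {
        final List<String> samples = List.of("Hi", "there,", "BettaTech,", "upload!", "you?");
        // Prints: [hi, there, bettatech, upload, you?]
        System.out.println(samples.stream().map(NormalizeDemo::normalize).collect(Collectors.toList()));
    }
}
```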

Our target text is the following:

> Hi there, welcome to BettaTech, If you are liking this video, subscribe and hit the little bell to see the new videos that I upload!

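```java
final String[] words = ("Hi there, welcome to BettaTech, If you are liking " +
        "this video, subscribe and hit the little bell " +
        "to see the new videos that I upload!").split(" "); // (1)
```

(1) We put the text into a String array by calling `split` with a single space as the separator, so each word delimited by spaces is stored in its own position of the array. The parentheses around the concatenation are required: without them, `split` would apply only to the last literal and the code would not compile. Punctuation stays attached to the words at this stage; removing it is the job of `normalize`.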
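Splitting on a single space is also why empty Strings can appear. The small sketch below (hypothetical class `SplitDemo`) compares `split(" ")` with the whitespace regex `split("\\s+")`, assuming a text that contains two consecutive spaces:

```java
import java.util.Arrays;

// Hypothetical demo: split(" ") versus split("\\s+") on a text with two consecutive spaces
final class SplitDemo {

    // Same separator as the article: exactly one space
    static String[] bySingleSpace(final String text) {
        return text.split(" ");
    }

    // Any run of whitespace (spaces, tabs, newlines) counts as a single separator
    static String[] byWhitespaceRun(final String text) {
        return text.split("\\s+");
    }

    public static void main(String[] args) {
        final String text = "Hi  there, welcome";
        System.out.println(Arrays.toString(bySingleSpace(text)));   // [Hi, , there,, welcome]
        System.out.println(Arrays.toString(byWhitespaceRun(text))); // [Hi, there,, welcome]
    }
}
```

`\\s+` also copes with tabs and newlines, but a leading space still yields an empty first element, so the empty-String filter remains useful either way.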

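```java
System.out.printf("%-15s%s%n", "Word", "Frequency"); // (1)
```

(1) `printf` prints the header in two columns: `%-15s` left-aligns the first value and pads it to 15 characters, and `%n` writes the platform line separator.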
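A quick sketch of the layout that this format pattern produces; the `row` helper and the `PrintfDemo` class are hypothetical:

```java
// Hypothetical demo of the two-column layout produced by "%-15s%s"
final class PrintfDemo {

    // First value left-aligned and padded with spaces to 15 characters, second value right after it
    static String row(final Object first, final Object second) {
        return String.format("%-15s%s", first, second);
    }

    public static void main(String[] args) {
        System.out.println(row("Word", "Frequency"));
        System.out.println(row("hi", 1L));
        System.out.println(row("bettatech", 1L));
    }
}
```

Values longer than 15 characters are not truncated; they simply push the second column to the right.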

```java
.filter(this::filterEmptyAndNull) // (1)
```

(1) We call the Stream's `filter` method; this is the filtering stage, which discards null and empty Strings. To keep the code compact, we pass the method itself as a method reference (`this::filterEmptyAndNull`).
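`this::filterEmptyAndNull` is shorthand for the lambda `word -> this.filterEmptyAndNull(word)`. In the sketch below the method is static, so the reference becomes `FilterDemo::filterEmptyAndNull` (hypothetical class name). The second method shows an alternative that writes the same filter only with JDK predicates (`Predicate.not` needs Java 11 or later):

```java
import java.util.Arrays;
import java.util.List;
import java.util.Objects;
import java.util.function.Predicate;
import java.util.stream.Collectors;

// Hypothetical demo of the filtering stage
final class FilterDemo {

    // Same check as the article's filterEmptyAndNull, made static for this sketch
    static boolean filterEmptyAndNull(final String word) {
        return Objects.nonNull(word) && !"".equals(word);
    }

    // Method reference, as in the article (ClassName::method because the method is static here)
    static List<String> withMethodReference(final String[] words) {
        return Arrays.stream(words)
                .filter(FilterDemo::filterEmptyAndNull)
                .collect(Collectors.toList());
    }

    // Same result built from JDK predicates only
    static List<String> withJdkPredicates(final String[] words) {
        return Arrays.stream(words)
                .filter(Objects::nonNull)
                .filter(Predicate.not(String::isEmpty))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        final String[] words = {"hi", "", null, "there"};
        System.out.println(withMethodReference(words)); // [hi, there]
        System.out.println(withJdkPredicates(words));   // [hi, there]
    }
}
```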

The predicate used by `filter`:

```java
private boolean filterEmptyAndNull(final String word) {
    return Objects.nonNull(word) && !"".equals(word);
}
```

```java
.map(this::normalize) // (1)
```

(1) `map` is the mapping stage of the stream. Here we again use a method reference, because `normalize` takes one parameter and returns a value, which is exactly the `Function<String, String>` shape that `map` expects.
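Because `filter` runs before `map` in this pipeline, a token made only of punctuation (for example a lone `,` between two spaces) passes the filter and is then normalized to `""`, which would be counted as a word. The sketch below, with the hypothetical class `MapOrderDemo` and a simplified empty check (`split` never returns null elements), compares that order with mapping first and filtering afterwards:

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Function;
import java.util.stream.Collectors;

// Hypothetical demo: where the empty check sits relative to map()
final class MapOrderDemo {

    private static final String REG_EXP = "[\\.\\,\\!]";

    // normalize fits map(): one String in, one String out, i.e. a Function<String, String>
    static final Function<String, String> NORMALIZE = word -> word.toLowerCase().replaceAll(REG_EXP, "");

    // Article's order: filter first, then map
    static List<String> filterThenMap(final String text) {
        return Arrays.stream(text.split(" "))
                .filter(word -> !word.isEmpty())
                .map(NORMALIZE)
                .collect(Collectors.toList());
    }

    // Variant: map first, then filter, so punctuation-only tokens are dropped too
    static List<String> mapThenFilter(final String text) {
        return Arrays.stream(text.split(" "))
                .map(NORMALIZE)
                .filter(word -> !word.isEmpty())
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        final String text = "Hi , there!";
        System.out.println(filterThenMap(text)); // [hi, , there]
        System.out.println(mapThenFilter(text)); // [hi, there]
    }
}
```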

```java
.collect(Collectors.groupingBy(e -> e, Collectors.counting())) // (1)
```

(1) `collect` performs a mutable reduction on the stream, and `groupingBy` tells it to group all the elements into a `Map`. The first argument is the classifier that computes each element's key; since the key is simply the element itself, a very common operation, we can use either `e -> e` or `Function.identity()`. The second argument is the downstream collector `Collectors.counting()`, which counts the elements in each group and so produces the value for each key.
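Putting the stages together, here is a self-contained sketch of the whole pipeline applied to the English target text. It uses static copies of `normalize` and `filterEmptyAndNull` inside a hypothetical `WordFrequencyDemo` class instead of the Spring service, and also runs the same pipeline in parallel to check that both produce the same map:

```java
import java.util.Arrays;
import java.util.Map;
import java.util.Objects;
import java.util.function.Function;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical sketch of the pipeline: split -> filter -> map -> groupingBy(counting)
final class WordFrequencyDemo {

    static final String TARGET = "Hi there, welcome to BettaTech, If you are liking this video, "
            + "subscribe and hit the little bell to see the new videos that I upload!";

    private static final String REG_EXP = "[\\.\\,\\!]";

    static String normalize(final String word) {
        return word.toLowerCase().replaceAll(REG_EXP, "");
    }

    static boolean filterEmptyAndNull(final String word) {
        return Objects.nonNull(word) && !"".equals(word);
    }

    static Map<String, Long> count(final Stream<String> words) {
        return words
                .filter(WordFrequencyDemo::filterEmptyAndNull)
                .map(WordFrequencyDemo::normalize)
                // Key: the word itself; value: how many times it appears
                .collect(Collectors.groupingBy(Function.identity(), Collectors.counting()));
    }

    static Map<String, Long> sequential(final String text) {
        return count(Arrays.stream(text.split(" ")));
    }

    static Map<String, Long> parallel(final String text) {
        return count(Arrays.stream(text.split(" ")).parallel());
    }

    public static void main(String[] args) {
        final Map<String, Long> counts = sequential(TARGET);
        System.out.println(counts.get("to"));  // 2
        System.out.println(counts.get("the")); // 2
        // Same entries either way; only the iteration order of the map is unspecified
        System.out.println(counts.equals(parallel(TARGET))); // true
    }
}
```

`groupingBy` makes no promise about the order of the returned map; if sorted keys are wanted, the three-argument overload `groupingBy(Function.identity(), TreeMap::new, Collectors.counting())` lets you choose the map implementation.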

The complete service class:

```java
package com.test.frencuencywords.service;

import lombok.NonNull;
import org.springframework.stereotype.Service;

import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Arrays;
import java.util.Map;
import java.util.Objects;
import java.util.function.Function;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/**
 * @author rubn
 */
@Service
public class FrecuencyWordsService {

    private static final String REG_EXP = "[\\.\\,\\!]";

    private static final String SPLIT_ESPACE_CARACTER = " ";

    /**
     * Process with a parallel stream.
     *
     * @param words text whose words are counted
     * @return Map<String, Long> with the word as key and its frequency as value
     */
    public Map<String, Long> frecuencyWordsParallel(@NonNull final String words) {
        final String[] splitWords = words.split(SPLIT_ESPACE_CARACTER);
        return Arrays.stream(splitWords)
                .parallel() // (1)
                .filter(this::filterEmptyAndNull)
                .map(this::normalize)
                .collect(Collectors.groupingBy(Function.identity(), Collectors.counting()));
    }

    /**
     * Process with a sequential stream.
     *
     * @param words text whose words are counted
     * @return Map<String, Long> with the word as key and its frequency as value
     */
    public Map<String, Long> frecuencyWords(@NonNull final String words) {
        final String[] splitWords = words.split(SPLIT_ESPACE_CARACTER);
        return Arrays.stream(splitWords)
                .filter(this::filterEmptyAndNull)
                .map(this::normalize)
                .collect(Collectors.groupingBy(Function.identity(), Collectors.counting()));
    }

    /**
     * @param input path of the text file to read
     * @return Map<String, Long> with the word as key and its frequency as value
     */
    public Map<String, Long> frecuencyWordsFromFile(final Path input) { // (2)
        try (final Stream<String> lines = Files.lines(input)) { // (3)
            // Join with a space so the last word of a line is not glued to the first word of the next
            return Arrays.stream(lines.collect(Collectors.joining(SPLIT_ESPACE_CARACTER)) // (4)
                            .split(SPLIT_ESPACE_CARACTER))
                    .filter(this::filterEmptyAndNull)
                    .map(this::normalize)
                    .collect(Collectors.groupingBy(Function.identity(), Collectors.counting()));
        } catch (IOException ex) {
            throw new UncheckedIOException(ex);
        }
    }

    private boolean filterEmptyAndNull(final String word) {
        return Objects.nonNull(word) && !"".equals(word);
    }

    private String normalize(final String word) {
        return word.toLowerCase().replaceAll(REG_EXP, "");
    }
}
```

(1) `parallel()` turns the stream into a parallel one. For a short text like this it usually brings no noticeable performance gain, because splitting the work and merging the partial maps has its own cost, so it is rarely worth using here.

(2) The `Path` parameter holds the location of the input, in this case the file we want to read.

(3) `Files.lines` reads the file as a `Stream<String>` with one element per line. The stream is populated lazily, so the file is not loaded into memory all at once 🥰. Since the stream keeps the file open, we close it with try-with-resources.

(4) We collect the whole stream of lines into a single String, joined with a space. Note that at this point the full text does end up in memory.
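Since `Collectors.joining` gathers the whole file into one String anyway, a variant that keeps the processing line by line could use `flatMap`. This is a sketch, not the article's method: the class and method names are hypothetical, it assumes UTF-8 input, and it filters after normalizing:

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical sketch: count words line by line with flatMap instead of joining the file
final class FileFrequencyDemo {

    private static final String REG_EXP = "[\\.\\,\\!]";

    static Map<String, Long> frequencyFromFile(final Path input) {
        // try-with-resources closes the file handle held by Files.lines
        try (Stream<String> lines = Files.lines(input, StandardCharsets.UTF_8)) {
            return lines
                    // Each line becomes a stream of its words, so lines are never glued together
                    .flatMap(line -> Arrays.stream(line.split(" ")))
                    .map(word -> word.toLowerCase().replaceAll(REG_EXP, ""))
                    .filter(word -> !word.isEmpty())
                    .collect(Collectors.groupingBy(Function.identity(), Collectors.counting()));
        } catch (IOException ex) {
            throw new UncheckedIOException(ex);
        }
    }

    public static void main(String[] args) throws IOException {
        // Temporary two-line file, only for the demo
        final Path tmp = Files.createTempFile("words", ".txt");
        Files.write(tmp, List.of("Hi there,", "hi again!"), StandardCharsets.UTF_8);
        System.out.println(frequencyFromFile(tmp).get("hi")); // 2
        Files.delete(tmp);
    }
}
```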

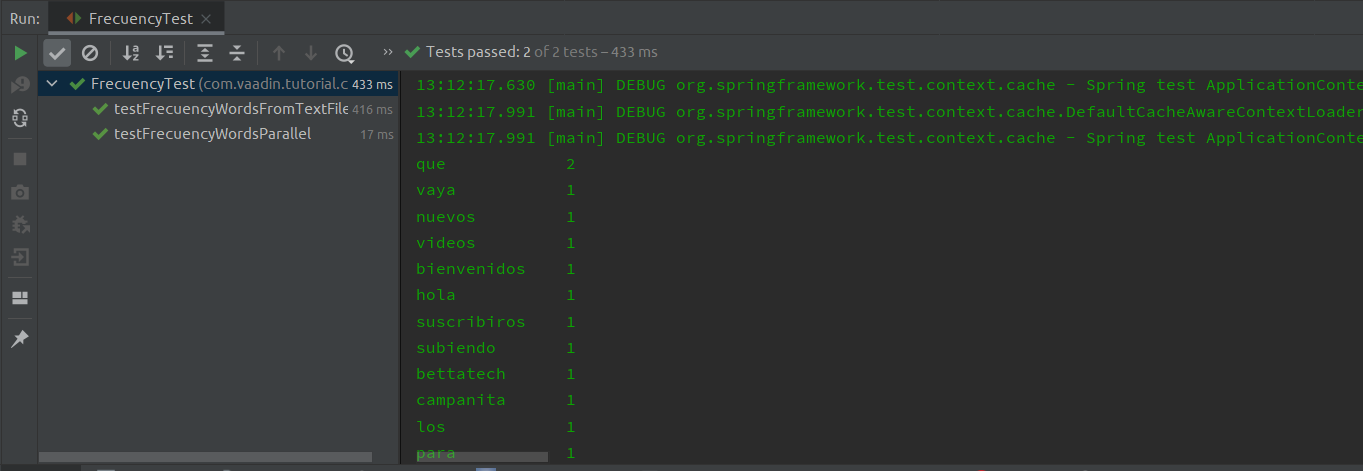

Finally, the tests, including two that read a file containing the same target text:

```java
package com.test.frencuencywords;

import com.test.frencuencywords.service.FrecuencyWordsService;
import com.test.frencuencywords.util.Memory;
import lombok.extern.log4j.Log4j2;
import org.junit.jupiter.api.Assertions;
import org.junit.jupiter.api.DisplayName;
import org.junit.jupiter.api.Test;
import org.junit.jupiter.api.extension.ExtendWith;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.test.context.ContextConfiguration;
import org.springframework.test.context.junit.jupiter.SpringExtension;

import java.io.*;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Map;
import java.util.Objects;
import java.util.stream.Collectors;

import static org.assertj.core.api.AssertionsForInterfaceTypes.assertThat;

/**
 * @author rubn
 *
 * Simple test for word frequencies
 */
@Log4j2
@ExtendWith(SpringExtension.class)
@ContextConfiguration(classes = {FrecuencyWordsService.class, Memory.class})
@DisplayName("Word frequencies, reading text, sync, async from String or File")
class FrecuencyTest {

    private static final String FORMAT_PRINTF_15 = "%-15s%s";
    private static final String WORD = "Word";
    private static final String HOLA = "hola";
    private static final String FRECUENCY = "Frecuency";
    private static final Path PATH_TEXT_FILE = Path.of("src/test/resources/textoSimple.txt");
    private static final String WORDS = "Hi there, welcome to BettaTech, If you are liking this video, subscribe and hit the little bell to see the new videos that I upload!";

    @Autowired
    private FrecuencyWordsService frecuencyWordsService;

    @Autowired
    private Memory memory;

    @Test
    @DisplayName("reading text from String text with blocking IO")
    void testFrecuencyWordsNull() {
        log.info("Run Frecuency");
        log.info(String.format(FORMAT_PRINTF_15, WORD, FRECUENCY));
        final Map<String, Long> mapWordsSync = frecuencyWordsService.frecuencyWords(WORDS);
        Assertions.assertNotNull(mapWordsSync);
        mapWordsSync.forEach((word, frecuency) -> { // (1)
            log.info(String.format(FORMAT_PRINTF_15, word, frecuency));
        });
        // containsEntry fails with a clear message instead of a NullPointerException if the key is missing
        assertThat(mapWordsSync).containsEntry(HOLA, 1L);
        // total memory consumption
        log.info(memory.getTotalMemory());
    }

    @Test
    @DisplayName("reading text from String text parallel")
    void testFrecuencyWordsParallel() {
        log.info("Run Frecuency Parallel");
        log.info(String.format(FORMAT_PRINTF_15, WORD, FRECUENCY));
        final Map<String, Long> mapWordsParallel = frecuencyWordsService.frecuencyWordsParallel(WORDS);
        Assertions.assertNotNull(mapWordsParallel);
        mapWordsParallel.forEach((word, frecuency) -> log.info(String.format(FORMAT_PRINTF_15, word, frecuency)));
        assertThat(mapWordsParallel).containsEntry(HOLA, 1L);
        // total memory consumption
        log.info(memory.getTotalMemory());
    }

    @Test
    @DisplayName("reading text from input with 'BufferedReader'")
    void testFrecuencyWordsFromTextFile() {
        if (Files.exists(PATH_TEXT_FILE)) {
            log.info("Run Frecuency from BufferedReader");
            try (final InputStream inputStream = Objects.requireNonNull(
                         this.getClass().getResourceAsStream("/textoSimple.txt"), "resource not found");
                 final Reader reader = new InputStreamReader(inputStream, StandardCharsets.UTF_8);
                 final BufferedReader br = new BufferedReader(reader)) {
                // read lines with the Stream API, joined with a space so words from different lines stay apart
                final String text = br.lines().collect(Collectors.joining(" "));
                final Map<String, Long> mapWords = frecuencyWordsService.frecuencyWords(text);
                log.info(String.format(FORMAT_PRINTF_15, WORD, FRECUENCY));
                mapWords.forEach((word, frecuency) -> log.info(String.format(FORMAT_PRINTF_15, word, frecuency)));
                assertThat(mapWords).containsEntry(HOLA, 1L);
            } catch (IOException ex) {
                // fail the test instead of only logging the error
                Assertions.fail("Could not read textoSimple.txt", ex);
            }
            // total memory consumption
            log.info(memory.getTotalMemory());
        } else {
            throw new RuntimeException("File not found!");
        }
    }

    @Test
    @DisplayName("reading text from input with 'Files.lines'")
    void frecuencyFromPath() {
        log.info("Run Frecuency from Files.lines");
        final Map<String, Long> mapWords = frecuencyWordsService.frecuencyWordsFromFile(PATH_TEXT_FILE);
        log.info(String.format(FORMAT_PRINTF_15, WORD, FRECUENCY));
        mapWords.forEach((word, frecuency) -> log.info(String.format(FORMAT_PRINTF_15, word, frecuency)));
        assertThat(mapWords).containsEntry(HOLA, 1L);
        // total memory consumption
        log.info(memory.getTotalMemory());
    }
}
```

(1) We iterate over the result with `forEach`. Since our `collect` already returns a `Map<String, Long>`, this is the `forEach` of the `Map` interface, which takes a `BiConsumer` as its parameter; its `accept` method has two generic parameters, one for the key and one for the value: `forEach((key, value) -> { ... })`.
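Because the collected map has no particular order, the tests print the words in an unspecified order. A hypothetical helper like `sortedRows` below could sort the entries by frequency, highest first and then alphabetically, before printing; the counts in `main` are sample values only:

```java
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical helper: print the word/frequency map sorted by frequency
final class SortedOutputDemo {

    // Highest frequency first; ties broken alphabetically by word
    static List<String> sortedRows(final Map<String, Long> counts) {
        return counts.entrySet().stream()
                .sorted(Map.Entry.<String, Long>comparingByValue().reversed()
                        .thenComparing(Map.Entry.comparingByKey()))
                .map(entry -> String.format("%-15s%s", entry.getKey(), entry.getValue()))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // Sample counts, only to illustrate the ordering
        final Map<String, Long> counts = Map.of("hi", 1L, "the", 2L, "to", 2L, "bell", 1L);
        // Map.forEach hands each key/value pair to a BiConsumer, as in the tests (order unspecified)
        counts.forEach((word, frequency) -> System.out.println(word + " -> " + frequency));
        // Sorted: the, to, bell, hi
        sortedRows(counts).forEach(System.out::println);
    }
}
```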

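Console output of the test that uses parallel streams (this run used the Spanish version of the target text, which is why the words, and the `hola` key checked by the tests, are in Spanish):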

```text
15:35:14.030 [main] INFO com.test.frencuencywords.FrecuencyTest - Run Frecuency Parallel
15:35:14.035 [main] INFO com.test.frencuencywords.FrecuencyTest - Word Frecuency
15:35:14.035 [main] INFO com.test.frencuencywords.FrecuencyTest - darle 1
15:35:14.036 [main] INFO com.test.frencuencywords.FrecuencyTest - que 2
15:35:14.036 [main] INFO com.test.frencuencywords.FrecuencyTest - a 2
15:35:14.036 [main] INFO com.test.frencuencywords.FrecuencyTest - vaya 1
15:35:14.036 [main] INFO com.test.frencuencywords.FrecuencyTest - os 1
15:35:14.036 [main] INFO com.test.frencuencywords.FrecuencyTest - nuevos 1
15:35:14.036 [main] INFO com.test.frencuencywords.FrecuencyTest - videos 1
15:35:14.036 [main] INFO com.test.frencuencywords.FrecuencyTest - bienvenidos 1
15:35:14.036 [main] INFO com.test.frencuencywords.FrecuencyTest - hola 1
15:35:14.036 [main] INFO com.test.frencuencywords.FrecuencyTest - verlos 1
15:35:14.036 [main] INFO com.test.frencuencywords.FrecuencyTest - suscribiros 1
15:35:14.036 [main] INFO com.test.frencuencywords.FrecuencyTest - subiendo 1
15:35:14.036 [main] INFO com.test.frencuencywords.FrecuencyTest - bettatech 1
15:35:14.036 [main] INFO com.test.frencuencywords.FrecuencyTest - este 1
15:35:14.036 [main] INFO com.test.frencuencywords.FrecuencyTest - campanita 1
15:35:14.036 [main] INFO com.test.frencuencywords.FrecuencyTest - para 1
15:35:14.037 [main] INFO com.test.frencuencywords.FrecuencyTest - está 1
15:35:14.037 [main] INFO com.test.frencuencywords.FrecuencyTest - la 1
15:35:14.037 [main] INFO com.test.frencuencywords.FrecuencyTest - si 1
15:35:14.037 [main] INFO com.test.frencuencywords.FrecuencyTest - gustando 1
15:35:14.037 [main] INFO com.test.frencuencywords.FrecuencyTest - y 1
15:35:14.037 [main] INFO com.test.frencuencywords.FrecuencyTest - vídeo 1
15:35:14.037 [main] INFO com.test.frencuencywords.FrecuencyTest - tal 1
15:35:14.076 [main] INFO com.test.frencuencywords.FrecuencyTest - Total Memory 57MB
```

The last line shows the total memory consumption at that moment; this figure usually varies greatly depending on the hardware.
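The `Memory` class used in the tests is not shown in this article. Assuming it reports the JVM heap in megabytes, as the `Total Memory 57MB` line suggests, a hypothetical stand-in could be built on `Runtime`:

```java
// Hypothetical stand-in for the article's Memory helper, whose source is not shown
final class MemoryDemo {

    private static final long MEGABYTE = 1024L * 1024L;

    // Runtime.totalMemory() is the heap currently reserved by the JVM, not the memory actually in use
    static long totalMemoryMb() {
        return Runtime.getRuntime().totalMemory() / MEGABYTE;
    }

    // Heap in use at this moment: reserved heap minus its free part
    static long usedMemoryMb() {
        final Runtime runtime = Runtime.getRuntime();
        return (runtime.totalMemory() - runtime.freeMemory()) / MEGABYTE;
    }

    // Same message shape as the log line above
    static String getTotalMemory() {
        return "Total Memory " + totalMemoryMb() + "MB";
    }

    public static void main(String[] args) {
        System.out.println(getTotalMemory());
        System.out.println("Used Memory " + usedMemoryMb() + "MB");
    }
}
```

`totalMemory()` is the heap the JVM has reserved so far, which can change over time and depends on settings such as `-Xms` and `-Xmx`; that is one reason the figure differs so much between machines.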

Inspiration

This question was reportedly asked of a developer in a job interview, and he solved it with TypeScript. It is not easy to solve at first, because some solutions are always more efficient than others, and this case is no exception: in my example I have two more ways to do it, with `switch` and with `for`, both of which work by incrementing positions.
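The `switch` and `for` versions are not shown here. For comparison, a plain `for` loop that counts with `Map.merge` might look like the following; it is a sketch with a hypothetical class name, not the author's code:

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical imperative sketch: one pass with a classic for loop and Map.merge
final class ForLoopFrequency {

    private static final String REG_EXP = "[\\.\\,\\!]";

    static Map<String, Long> frequencyWords(final String text) {
        final Map<String, Long> counts = new HashMap<>();
        final String[] words = text.split(" ");
        for (int i = 0; i < words.length; i++) {
            final String word = words[i].toLowerCase().replaceAll(REG_EXP, "");
            if (word.isEmpty()) {
                continue; // checked after normalizing, so punctuation-only tokens are skipped too
            }
            // Inserts 1 for a new word, otherwise adds 1 to the existing count
            counts.merge(word, 1L, Long::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        final Map<String, Long> counts = frequencyWords("to see the new videos to see");
        System.out.println(counts.get("see")); // 2
    }
}
```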