Hello Loom!

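```java
// Virtual threads are daemon threads, so join() keeps main alive until the greeting prints
Thread thread = Thread.startVirtualThread(() -> {
    System.out.println("Hello, Loom!");
});
thread.join();
```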

Test Tools

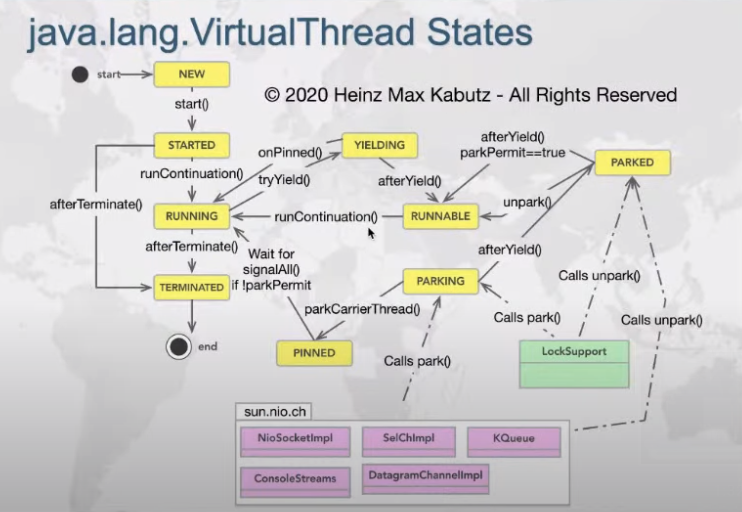

Since the end of 2017 there has been work on virtual threads, now available as a preview feature, and they promise to bring an unexpected twist to the Java world 🤔. Both [1]Ron Pressler and [2]Mark Reinhold keep us up to date on how it is going.

One reason is the performance they offer: virtual threads are not tied one-to-one to kernel threads (aka native threads). Instead, a lightweight execution context is created which, as Ben Evans points out, is very similar to the old green threads.

[3]Ben Evans: "OpenJDK’s Project Loom aims, as its primary goal, to revisit this long-standing implementation and instead enable new Thread objects that can execute code but do not directly correspond to dedicated OS threads. Or, to put it another way, Project Loom creates an execution model where an object that represents an execution context is not necessarily a thing that needs to be scheduled by the OS. Therefore, in some respects, Project Loom is a return to something similar to green threads."
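To see that difference in code, here is a minimal sketch, assuming JDK 21 or later (on JDK 19/20 the same API needs --enable-preview). The class name VirtualVsPlatform is hypothetical; Thread.ofPlatform(), Thread.ofVirtual() and Thread.isVirtual() are the JDK's own API. It starts one thread of each kind and asks each one whether it is virtual.

```java
import java.util.concurrent.atomic.AtomicBoolean;

class VirtualVsPlatform {

    // Starts one platform thread and one virtual thread and reports whether each is virtual.
    // Assumes JDK 21+ (or JDK 19/20 with --enable-preview).
    static boolean[] run() throws InterruptedException {
        AtomicBoolean platformIsVirtual = new AtomicBoolean();
        AtomicBoolean virtualIsVirtual = new AtomicBoolean();

        // A platform thread wraps a dedicated OS thread
        Thread platform = Thread.ofPlatform()
                .name("platform-1")
                .start(() -> platformIsVirtual.set(Thread.currentThread().isVirtual()));

        // A virtual thread is scheduled by the JVM onto a small pool of carrier threads
        Thread virtual = Thread.ofVirtual()
                .name("virtual-1")
                .start(() -> virtualIsVirtual.set(Thread.currentThread().isVirtual()));

        platform.join();
        virtual.join();
        return new boolean[] {platformIsVirtual.get(), virtualIsVirtual.get()};
    }

    public static void main(String[] args) throws InterruptedException {
        boolean[] flags = run();
        System.out.println("platform thread is virtual? " + flags[0]); // false
        System.out.println("virtual thread is virtual?  " + flags[1]); // true
    }
}
```

The builders share the same shape, which is the point Ben Evans makes: a virtual thread is still a java.lang.Thread, only the scheduling underneath changes.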

| It could be said that it is not a new concept, but another implementation. |

Project Loom vs Project Reactor?

| Are you sure Batman and Superman never worked together? 🤙🏿 |

It was said that with Project Loom there would be no need for reactive programming, but in reality the two can complement each other.

Moreover, even with virtual threads, code that creates threads by hand is usually not easy to read or compose, and composability is one of the strong points Project Reactor gives us.

Olek Dokuka with Andrii Rodionov

Running a couple of virtual threads

Hardware for this example 🔥

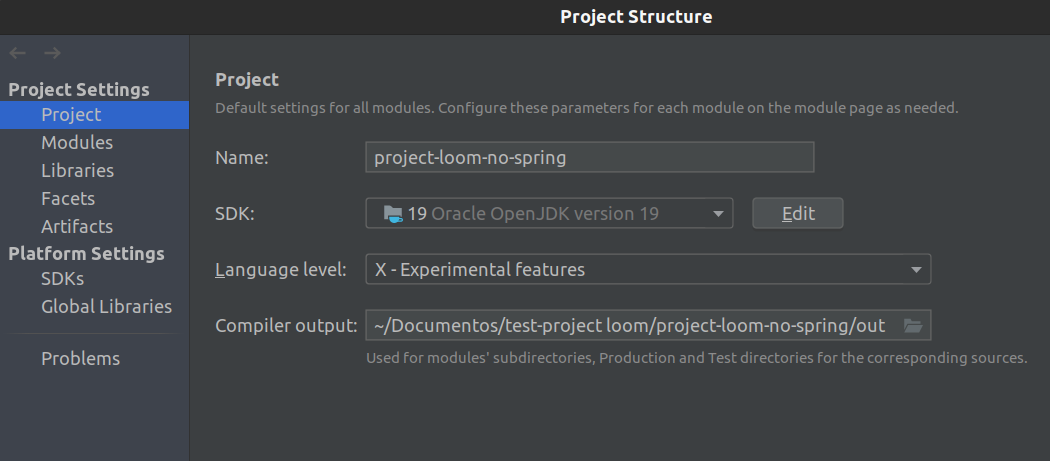

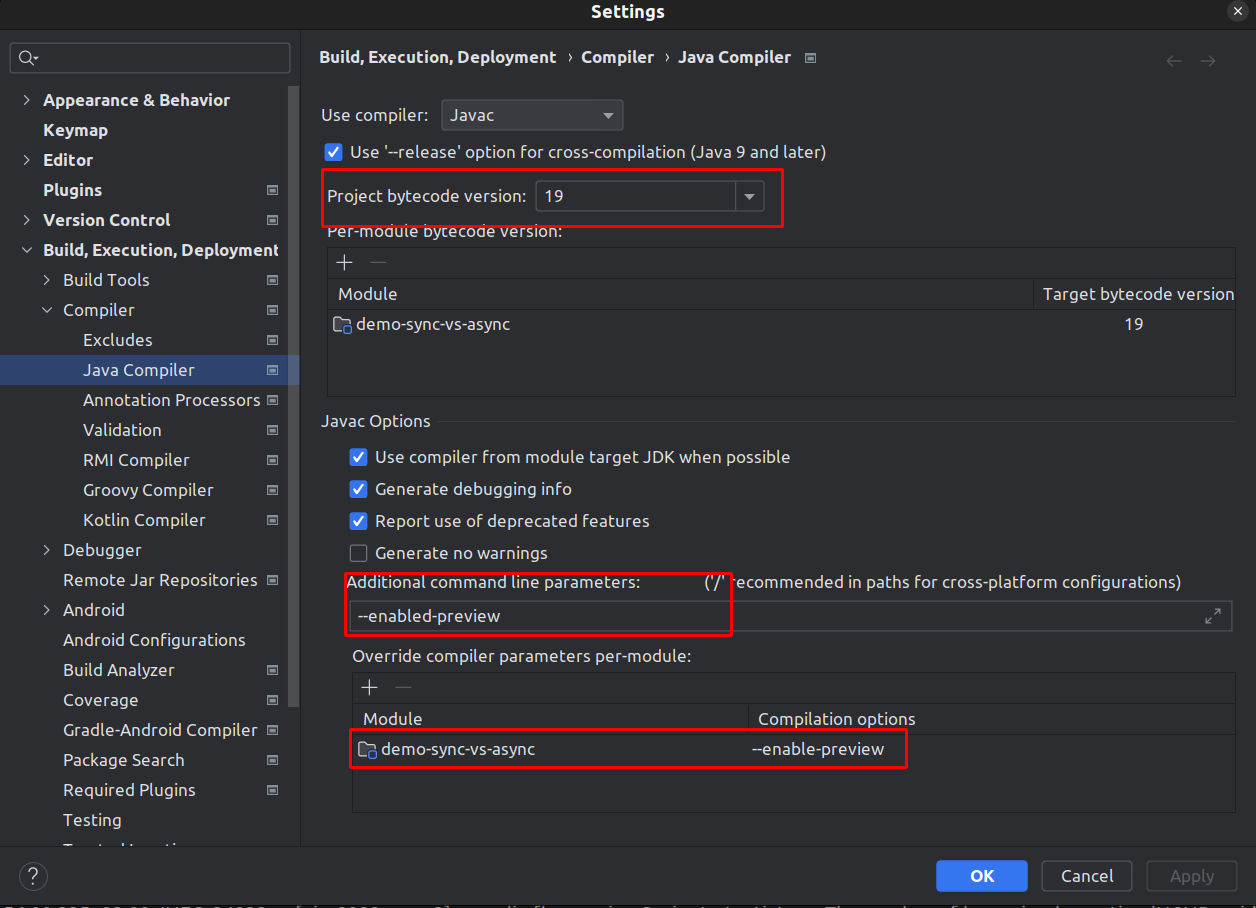

We are going to test with JDK 19, where virtual threads are still a preview feature, so we must enable a flag in the JVM and adjust some IDE settings so that the compiler also enables the new preview features.

- Project Structure

Use as shown in the picture.

⚙ Settings

This is the famous flag

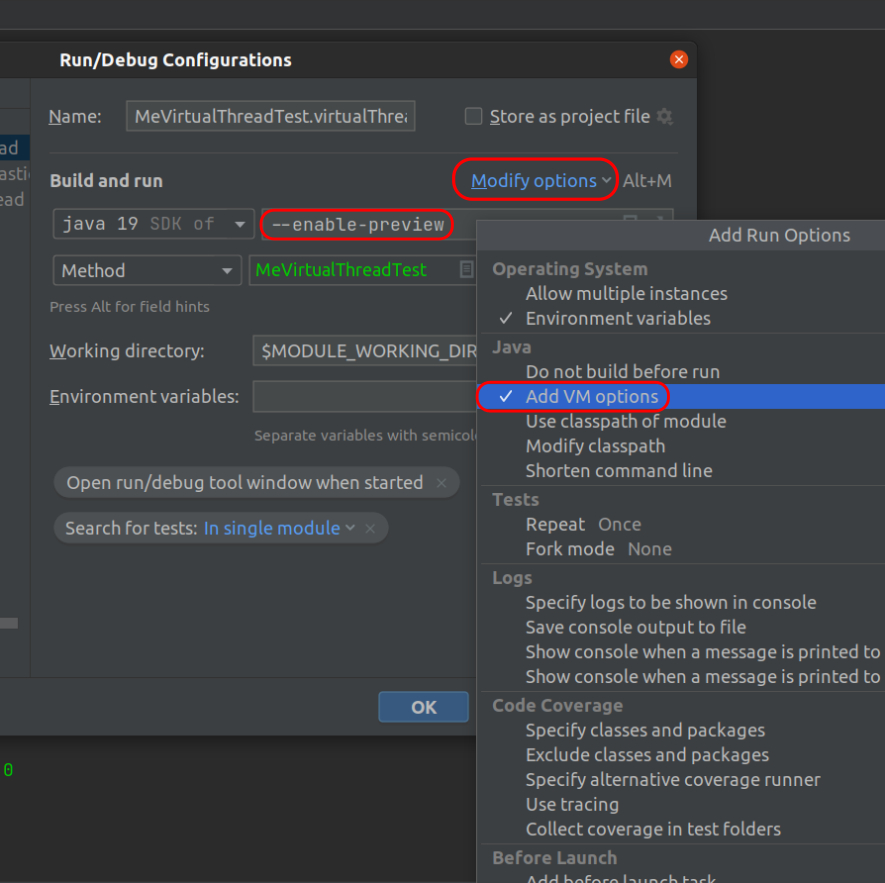

--enable-preview- Run/Debug Configurations

Use the following options

Añadir también -XX:+AllowRedefinitionToAddDeleteMethods

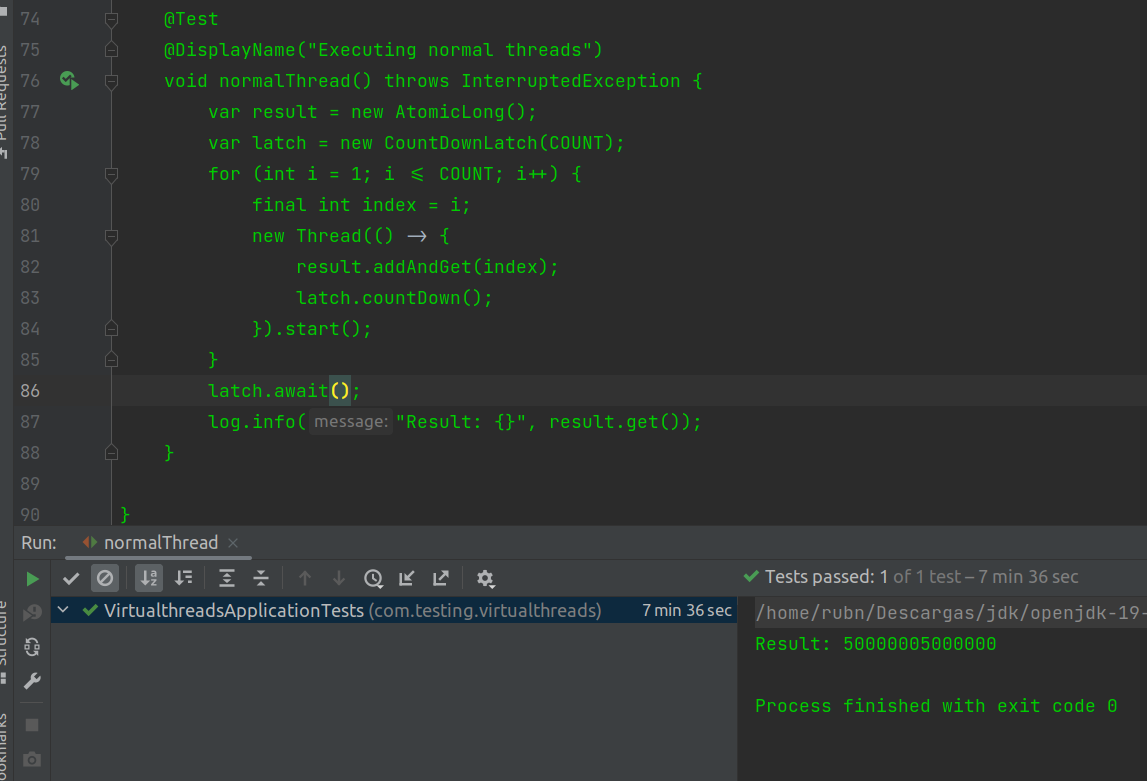

A normal OS thread

| The COUNT constant is 10 million, so this test starts 10 million threads |

```java
// Assumed fields of the test class (not shown in the original):
//   static final int COUNT = 10_000_000;
//   static final long RESULT = (long) COUNT * (COUNT + 1) / 2; // sum of 1..COUNT
// log is assumed to come from Lombok's @Slf4j, assertThat from AssertJ
@Test
@DisplayName("Executing normal threads")
void normalThread() throws InterruptedException {
    var result = new AtomicLong();
    var latch = new CountDownLatch(COUNT); // (1)
    for (int i = 1; i <= COUNT; i++) { // (2)
        final int index = i;
        new Thread(() -> { // (3)
            result.addAndGet(index);
            latch.countDown();
        }).start();
    }
    latch.await(); // (4)
    log.info("Result: {}", result.get());
    assertThat(result.get()).isEqualTo(RESULT);
}
```

| 1 | The COUNT constant, 10_000_000. |

| 2 | A for loop that runs COUNT times. |

| 3 | A classic thread, the way it has always been done: fire and forget, not suitable for production. |

| 4 | The latch waits until all COUNT threads have counted down, without calling get() or join() on each one. |
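The test relies on COUNT and RESULT without showing them. Since every thread adds its own index, RESULT has to be 1 + 2 + ... + COUNT = COUNT × (COUNT + 1) / 2, which is 50,000,005,000,000 for 10 million. Below is a standalone sketch of the same pattern, assuming plain Java without JUnit, Lombok or AssertJ; the class and method names are hypothetical, and the count is scaled down so it runs in a moment.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicLong;

class NormalThreadSum {

    // The RESULT the test expects: 1 + 2 + ... + count, computed in long to avoid int overflow
    static long expectedSum(int count) {
        return (long) count * (count + 1) / 2;
    }

    // Same pattern as normalThread(): one platform thread per index, one latch for all of them
    static long sumWithPlatformThreads(int count) throws InterruptedException {
        var result = new AtomicLong();
        var latch = new CountDownLatch(count);
        for (int i = 1; i <= count; i++) {
            final int index = i;
            new Thread(() -> {
                result.addAndGet(index);
                latch.countDown();
            }).start();
        }
        latch.await(); // returns once every thread has counted down
        return result.get();
    }

    public static void main(String[] args) throws InterruptedException {
        int count = 10_000; // scaled down from the test's 10_000_000
        long sum = sumWithPlatformThreads(count);
        System.out.println(sum == expectedSum(count)); // true
        System.out.println(expectedSum(10_000_000));   // 50000005000000
    }
}
```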

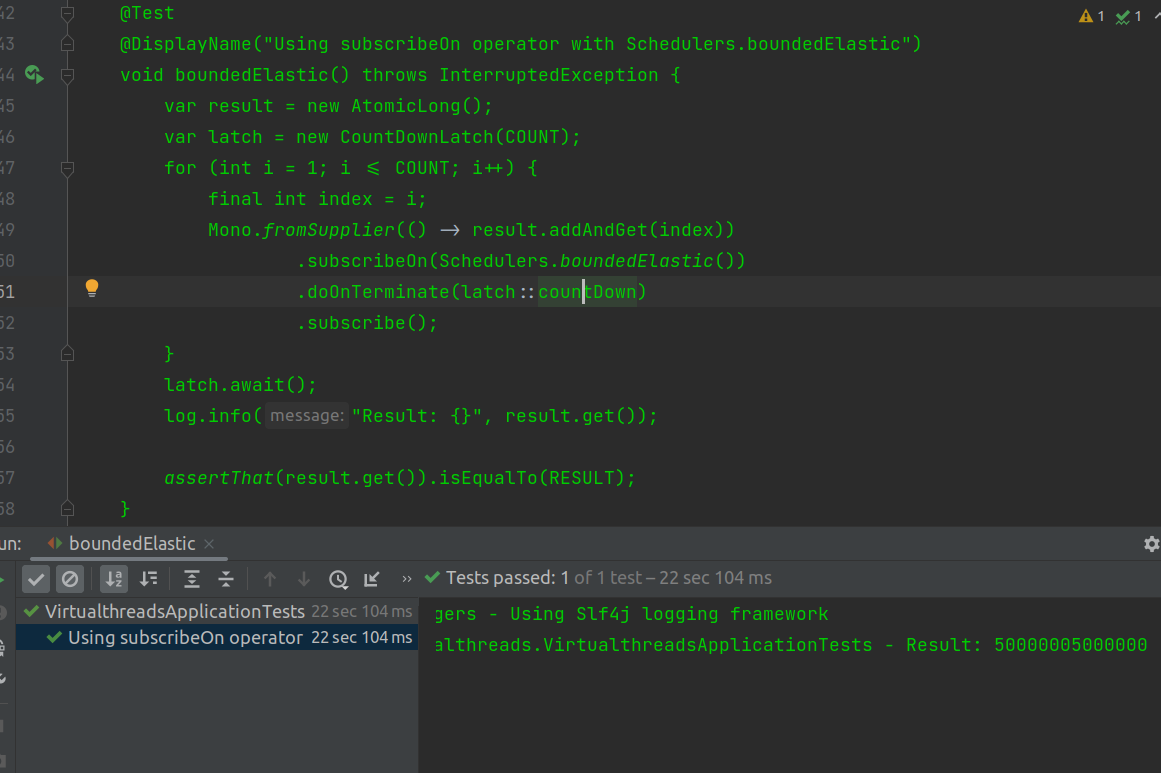

With Simon Baslé's Scheduler

He wrote the Scheduler called boundedElastic to replace the deprecated elastic one.

```java
@Test
@DisplayName("Using subscribeOn operator with Schedulers.boundedElastic")
void boundedElastic() throws InterruptedException {
    var result = new AtomicLong();
    var latch = new CountDownLatch(COUNT);
    for (int i = 1; i <= COUNT; i++) {
        final int index = i;
        Mono.fromSupplier(() -> result.addAndGet(index))
            .subscribeOn(Schedulers.boundedElastic()) // (1)
            .doOnTerminate(latch::countDown)
            .subscribe();
    }
    latch.await();
    log.info("Result: {}", result.get());
    assertThat(result.get()).isEqualTo(RESULT);
}
```

| 1 | Because boundedElastic is set with the subscribeOn operator, the whole reactive chain, starting from the subscription, runs on this Scheduler's threads, so every Mono pays for a context switch. |

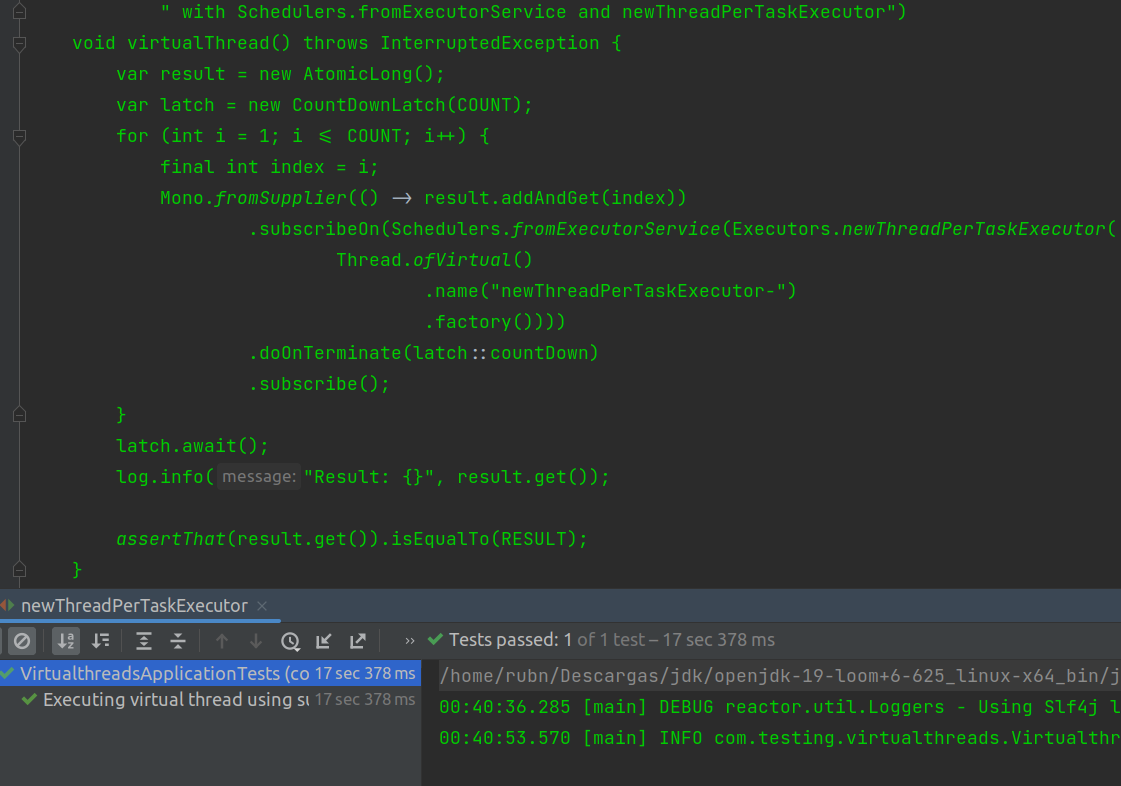

Case 1

| newVirtualThreadPerTaskExecutor |

```java
@Test
@SneakyThrows
@DisplayName("Executing virtual thread using subscribeOn operator " +
        "with Schedulers.fromExecutorService and newVirtualThreadPerTaskExecutor")
void virtualThread() {
    var result = new AtomicLong();
    var latch = new CountDownLatch(COUNT);
    for (int i = 1; i <= COUNT; i++) {
        final int index = i;
        Mono.fromSupplier(() -> result.addAndGet(index))
            // note: a new executor and Scheduler are created on every iteration
            .subscribeOn(Schedulers.fromExecutorService(
                Executors.newVirtualThreadPerTaskExecutor())) // (1)
            .doOnTerminate(latch::countDown)
            .subscribe();
    }
    latch.await();
    log.info("Result: {}", result.get());
    assertThat(result.get()).isEqualTo(RESULT);
}
```

| 1 | We plug in our virtual threads: the Scheduler wraps a JDK executor that starts a new virtual thread for each task. |
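For comparison, the same sum can be computed with the JDK executor alone, without Reactor. This is a sketch assuming JDK 21+ (or JDK 19/20 with --enable-preview); the class and method names are hypothetical. Unlike Case 1, it creates the executor once, and because ExecutorService is AutoCloseable, the try-with-resources close() waits for every task, so the latch is no longer needed.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicLong;

class VirtualExecutorSum {

    // Submits one task per index to an executor that starts a new virtual thread per task.
    // A single executor is shared by all tasks instead of one per iteration.
    static long sumWithVirtualThreads(int count) {
        var result = new AtomicLong();
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 1; i <= count; i++) {
                final int index = i;
                executor.execute(() -> result.addAndGet(index));
            }
        } // implicit close(): blocks until every submitted task has completed
        return result.get();
    }

    public static void main(String[] args) {
        int count = 1_000_000; // scaled down from 10 million
        System.out.println(sumWithVirtualThreads(count)); // 500000500000
    }
}
```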

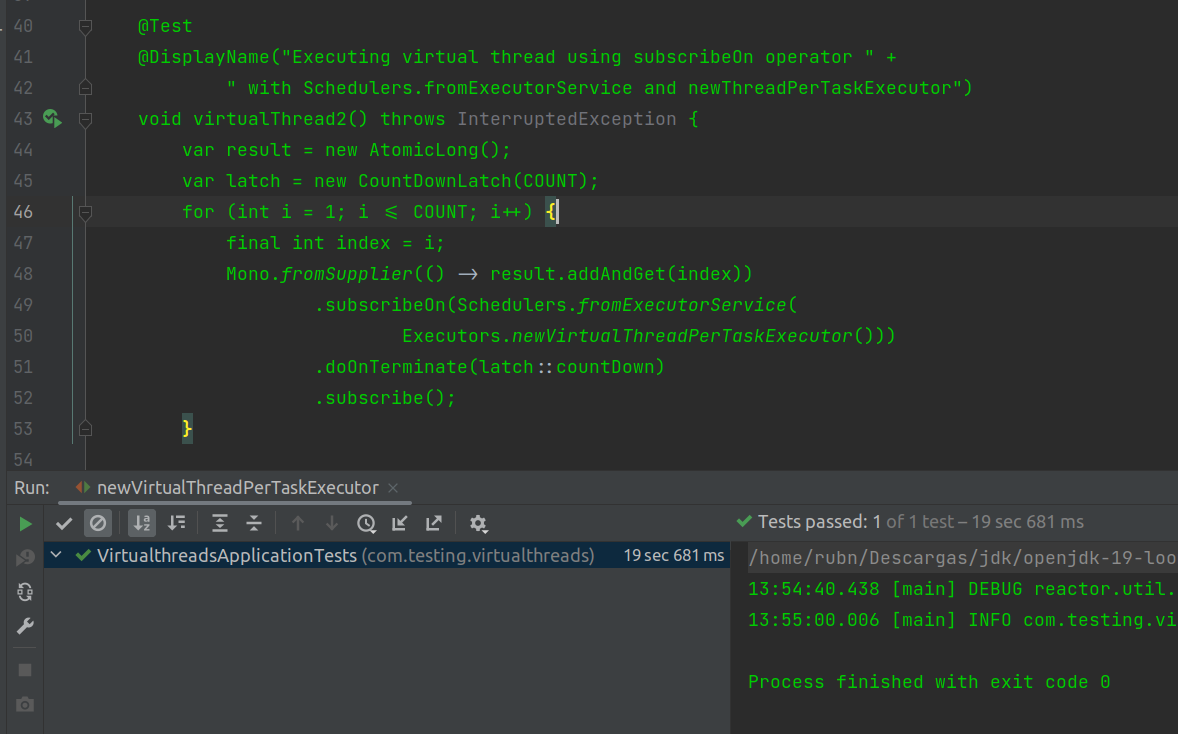

Case 2

| newThreadPerTaskExecutor |

```java
@Test
@SneakyThrows
@DisplayName("Executing virtual thread using subscribeOn operator " +
        "with Schedulers.fromExecutorService, newThreadPerTaskExecutor and factory")
void virtualThread2() {
    var result = new AtomicLong();
    var latch = new CountDownLatch(COUNT);
    for (int i = 1; i <= COUNT; i++) {
        final int index = i;
        Mono.fromSupplier(() -> result.addAndGet(index))
            .subscribeOn(Schedulers.fromExecutorService(Executors.newThreadPerTaskExecutor(
                Thread.ofVirtual()
                    .name("newThreadPerTaskExecutor-") // (1)
                    .factory())))
            .doOnTerminate(latch::countDown)
            .subscribe();
    }
    latch.await();
    log.info("Result: {}", result.get());
    assertThat(result.get()).isEqualTo(RESULT);
}
```

| 1 | The executor receives a virtual-thread factory built with Thread.ofVirtual(), so each task runs on a new virtual thread. |
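A detail about the factory in the callout above: Thread.Builder.name(String) gives every thread exactly the same name, while name(String, long) appends a counter. This hypothetical sketch (JDK 21+, or 19/20 with --enable-preview; class and method names are made up for illustration) runs three tasks through Executors.newThreadPerTaskExecutor with each kind of factory and collects the thread names.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadFactory;

class VirtualThreadNames {

    // Runs `tasks` tasks on an executor that asks `factory` for a new thread per task,
    // and records the name of the thread each task ran on
    static List<String> namesFrom(ThreadFactory factory, int tasks) {
        List<String> names = new CopyOnWriteArrayList<>();
        try (var executor = Executors.newThreadPerTaskExecutor(factory)) {
            for (int i = 0; i < tasks; i++) {
                executor.execute(() -> names.add(Thread.currentThread().getName()));
            }
        } // close() waits for all tasks to finish
        return names;
    }

    public static void main(String[] args) {
        // name(String): every thread gets exactly this name
        ThreadFactory sameName = Thread.ofVirtual().name("newThreadPerTaskExecutor-").factory();
        // name(String, long): prefix followed by a counter that starts at 0
        ThreadFactory numbered = Thread.ofVirtual().name("newThreadPerTaskExecutor-", 0).factory();

        System.out.println(namesFrom(sameName, 3));
        System.out.println(namesFrom(numbered, 3)); // suffixes 0, 1, 2 in completion order
    }
}
```

With 10 million tasks, a numbered prefix makes individual virtual threads distinguishable in logs and thread dumps.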

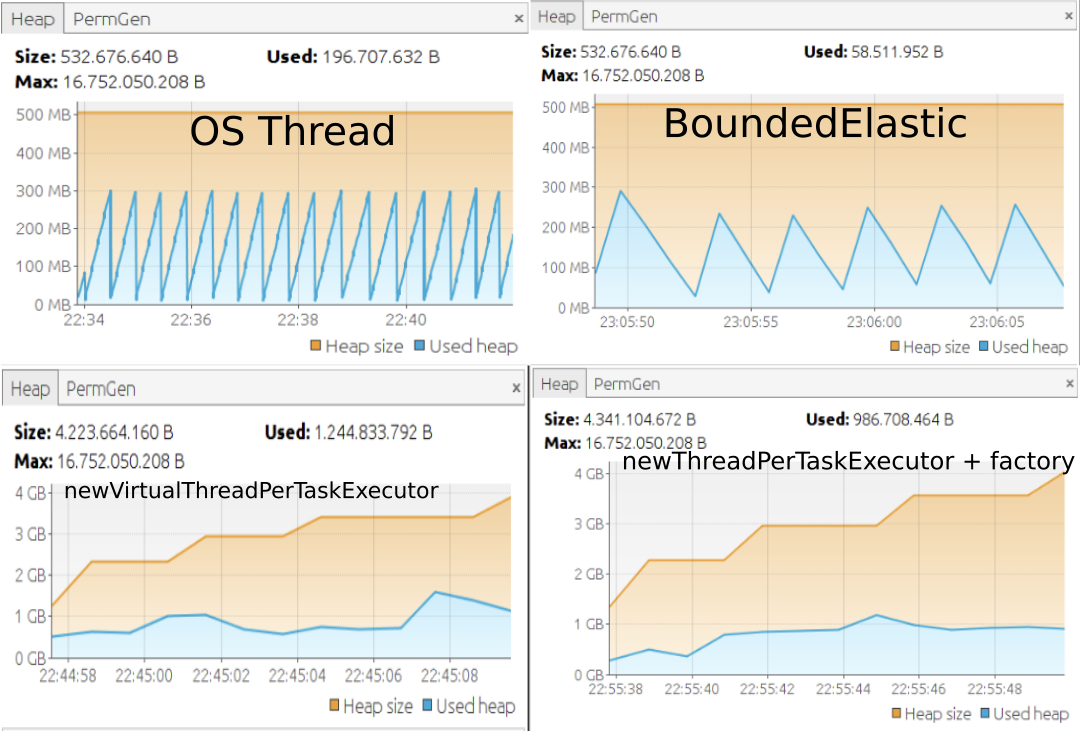

Summary table

| No. of threads | Thread type / Scheduler | Approximate time |
|---|---|---|
| 10 million | OS thread (a typical Thread) | |
| 10 million | boundedElastic | |
| 10 million | Case 1 | |
| 10 million | Case 2 + factory | |

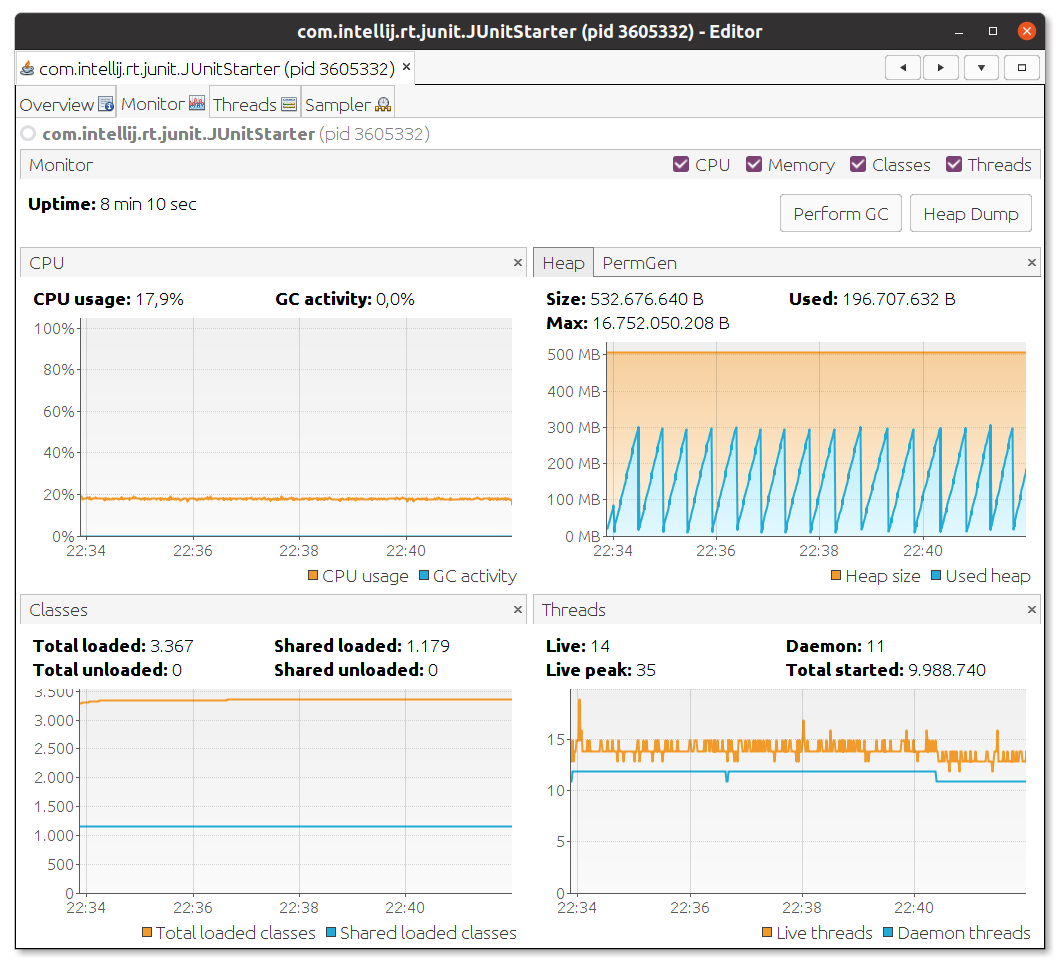

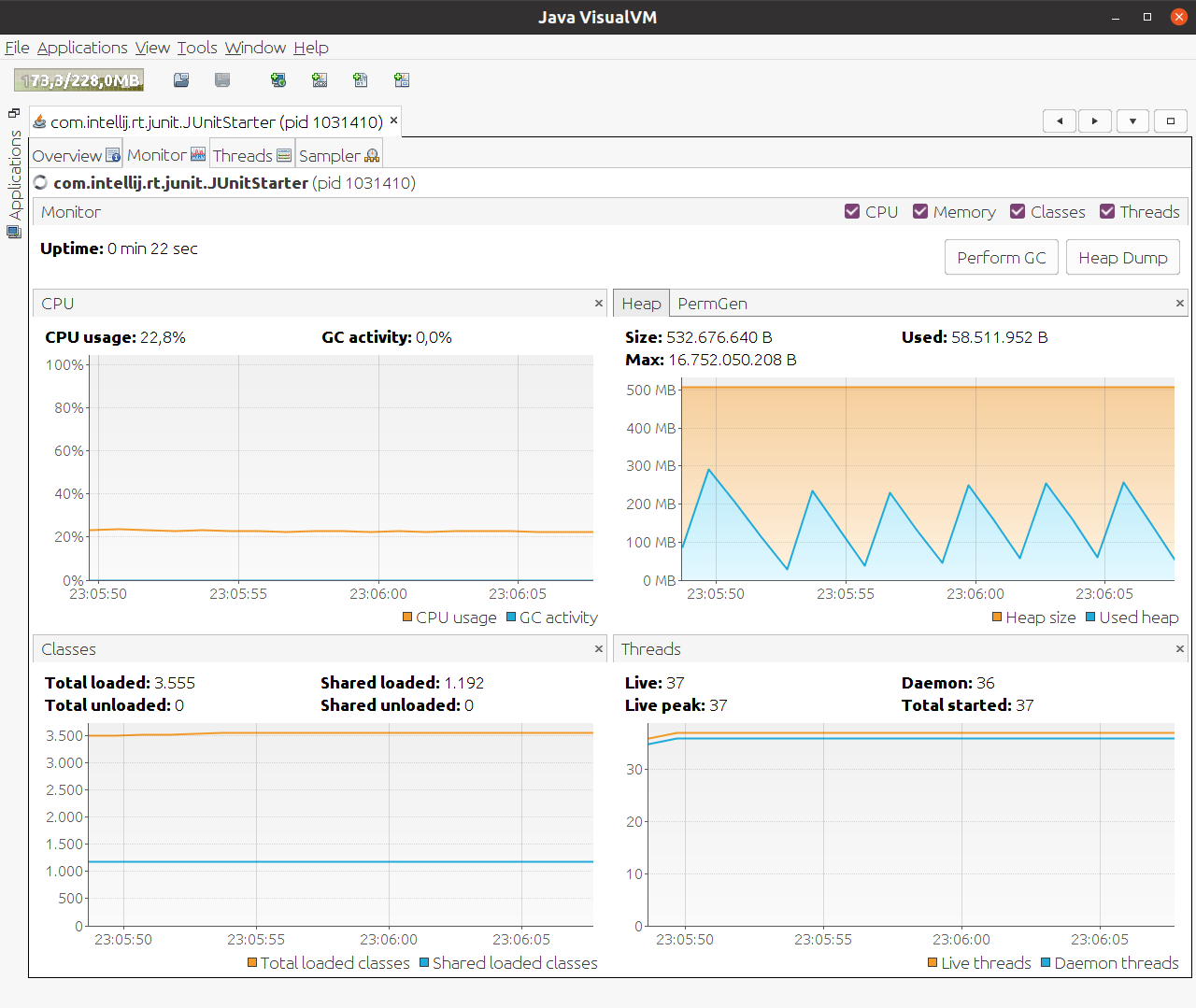

An ordinary reactive stream doesn’t lag as far behind as I imagined 🤔 Interesting!!!

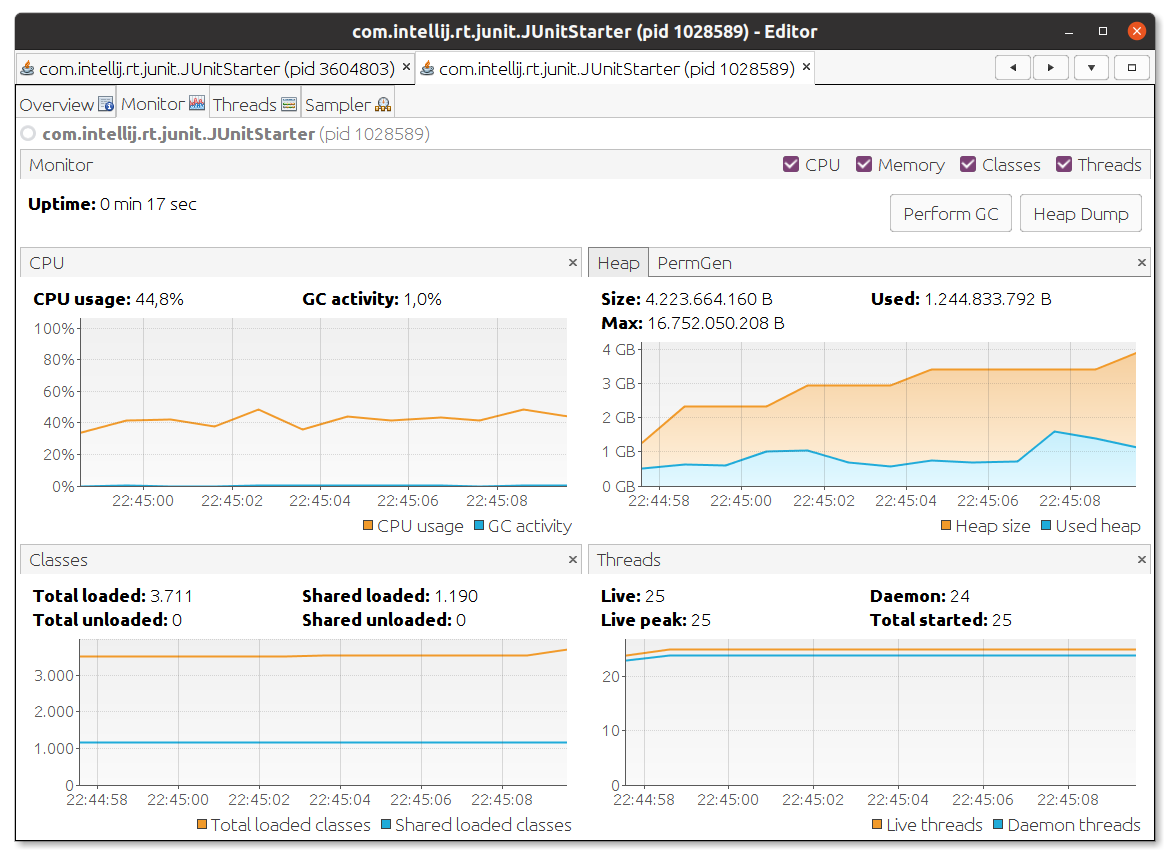

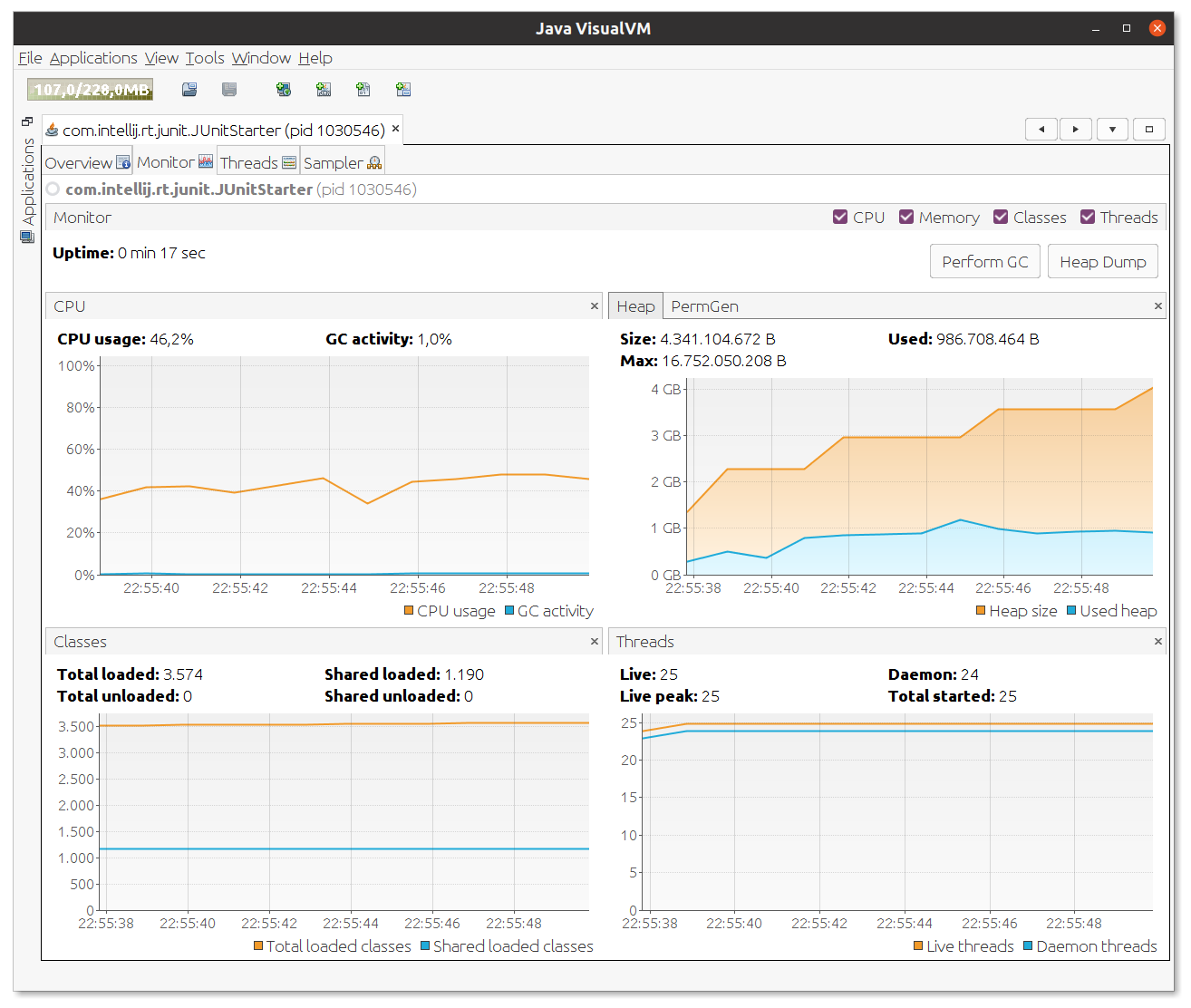

Also, the virtual threads seem to cause a spike in memory and CPU at first, but it certainly doesn't last long. 🔥

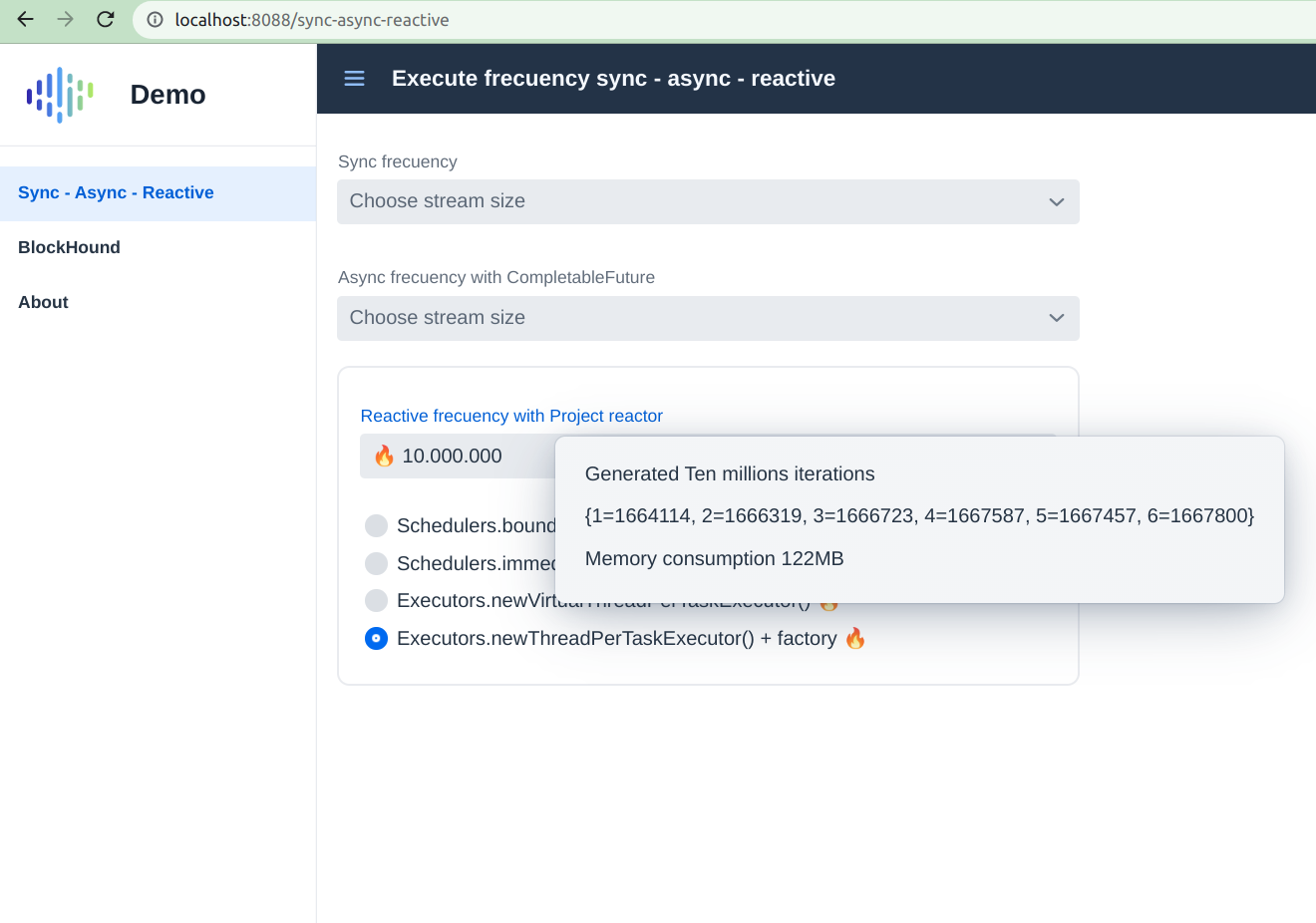

What about Vaadin?

For example, if we adapt our combo box, it would look like this:

How to measure throughput?

The example above doesn't prove much; it even looks as if they are all just as fast 🤣.

Ideally we would run a proper load test with JMeter, Artillery, Gatling, etc., to see how many requests each paradigm can process. The assumption is that the reactive approach would process more requests, perhaps twice as many.

Response speed alone is not the metric to go by.
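As a rough illustration of throughput rather than response time, here is a sketch in plain Java (JDK 21+). It is not a substitute for a real load test with JMeter or Gatling, and the class, record and method names are hypothetical. Each task blocks with Thread.sleep to stand in for I/O. With a fixed pool of 10 threads the tasks have to wait their turn, while the virtual-thread executor lets all of them sleep at the same time, so the tasks-per-second figure is where a difference should show up.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

class BlockingThroughput {

    record Result(int completed, double tasksPerSecond) {}

    // Runs `tasks` tasks that each block for `blockMillis`, then reports how many finished
    // and the throughput in tasks per second of wall-clock time
    static Result run(ExecutorService executor, int tasks, long blockMillis) {
        var completed = new AtomicInteger();
        long start = System.nanoTime();
        try (executor) { // close() waits until every submitted task has finished
            for (int i = 0; i < tasks; i++) {
                executor.execute(() -> {
                    try {
                        Thread.sleep(blockMillis); // stands in for a blocking I/O call
                        completed.incrementAndGet();
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
        }
        double seconds = (System.nanoTime() - start) / 1_000_000_000.0;
        return new Result(completed.get(), tasks / seconds);
    }

    public static void main(String[] args) {
        int tasks = 1_000;
        long blockMillis = 50;
        Result pool = run(Executors.newFixedThreadPool(10), tasks, blockMillis);
        Result virtual = run(Executors.newVirtualThreadPerTaskExecutor(), tasks, blockMillis);
        System.out.printf("fixed pool of 10 threads: %.0f tasks/s%n", pool.tasksPerSecond());
        System.out.printf("virtual threads:          %.0f tasks/s%n", virtual.tasksPerSecond());
    }
}
```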

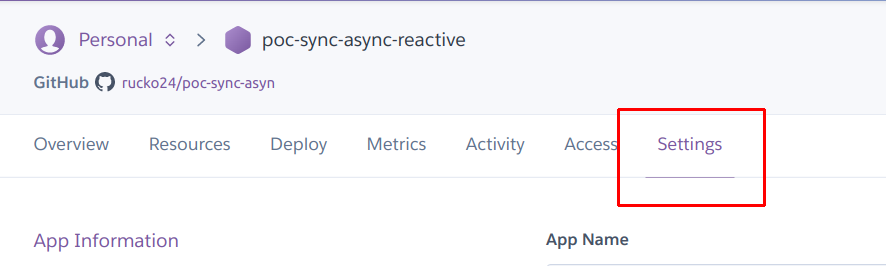

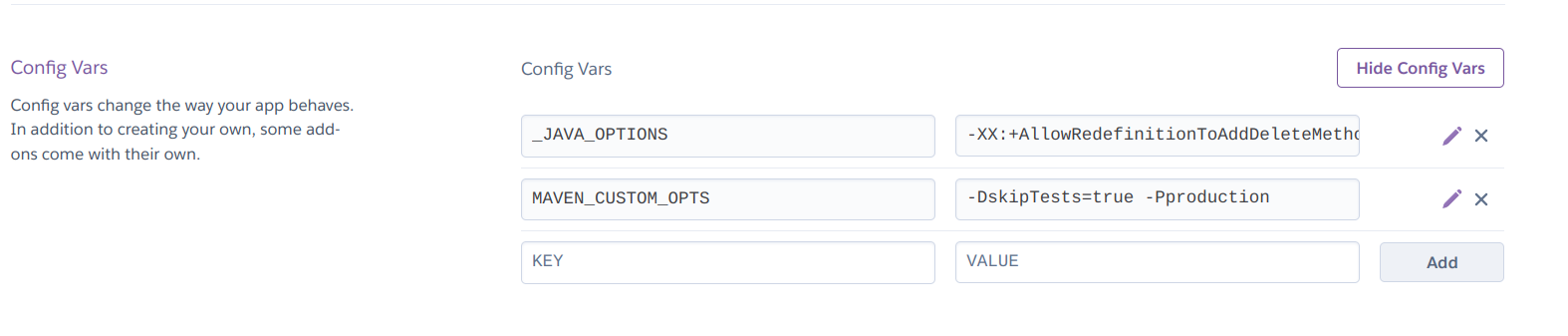

Parameters for deploying to Heroku

To deploy to Heroku we have to add the flags, including the one BlockHound needs: since we are running a JDK newer than 13, BlockHound complains without -XX:+AllowRedefinitionToAddDeleteMethods.

The properties are separated by commas ,

| -XX:+AllowRedefinitionToAddDeleteMethods --enable-preview |

Heroku doesn't rule!

Personally, Heroku's basic plan no longer appeals to me, and Oracle has a big advantage there, but that will be for another post.